- Chapter 1: Identification Of Fake News Using Real-Time Analytics

- 1.1. Introduction: Identification Of Fake News Using Real-Time Analytics

- 1.2. Background

- 1.3. Aim and Objectives

- 1.4. Research questions

- 1.5. Rationale

- 1.6. Problem statement

- 1.7. Scope of the research

- 1.8. Significance of research

- 1.9. Dissertation structure

- Chapter 2: Literature Review

- 2.1. Introduction

- 2.2. Concept of fake news detection

- 2.3. Impact of fake news detection

- 2.4. Challenges of fake news detection

- 2.5. Identification Approaches of Fake News

- 2.6. Application of real-time analytics in fake news detection

- 2.7. Implementation of Fake news detection using machine learning

- 2.8. Related Work

- 2.9. Methods based on Intervention

- 2.10. Summary

Chapter 1: Identification Of Fake News Using Real-Time Analytics

1.1. Introduction: Identification Of Fake News Using Real-Time Analytics


In today's fast-moving, technologically evolving world, much of daily life depends on computers, mobile phones, and the internet. News from around the world now spreads through online websites, social media, news channel apps, and similar outlets.

The term fake news mainly refers to articles or information posted on online publication websites and social media by someone who knows the statements are wrong or has intentionally distorted them. When ordinary readers consume this false news, the misinformation goes viral among the public and across social media. To stop fake news spreading through online websites and social media, methods based on real-time analysis have been developed to verify the credibility of news so that people can get accurate statements and information. However, current approaches for detecting fake news remain inadequate, and automatically pinpointing a particular item in the large volume of data on websites and social media is difficult. Even so, detection methods are developing significantly, and improvement in this field is ongoing.

1.2. Background

In this technologically advanced world, reliance on computers, the mobile internet, and social media is unavoidable. People now read news not only in newspapers and on TV but also on online websites and social media, and the same technology is misused to spread fake news online and make it go viral among the general public. Because the spread of fake news is not tolerable, it is important first to understand how fake news is generated and which factors influence its production. Discussion among authors on this problem shows that some scientific solutions exist, but that the existing traditional methods for detecting fake news are not commensurate with the effect of fake news on society or the enormity of the problem (Zhang et al. 2019).

Several methods have now been developed for detecting fake news and checking the credibility of news using modern tools and technologies. Examples include network analysis to detect and reduce the amount of fake news on online publication websites and social media; credibility networks built from conflicting relations to detect fake news in tweets; and machine learning techniques combined with “Boolean crowd-sourcing” algorithms. Google has announced the “Google News Initiative” to counter the spread of fake news, and Facebook and Wikipedia are also working on solutions to mitigate fake news outbreaks. Blockchain-based approaches to identifying and preventing fake news are also developing rapidly (Pulido et al. 2020).

1.3. Aim and Objectives

1.3.1. Aim

The aim of this project is to identify fake news accurately with the help of real-time analysis and to devise an effective solution for fake news detection and prevention (Sharma and Sharma, 2019).

1.3.2. Objectives

The objectives of this project are as follows:

- To evaluate fake news with the help of real-time analysis

- To analyze whether news is fake or not by using the exploratory analysis of data.

- To implement machine-learning methods and models to detect the fake news on the COVID-19 dataset and explore all the patterns of the dataset

- To recommend a practical and impactful approach to prevent the spreading of fake news via online websites and social media

1.4. Research questions

Several research questions arise in devising a solution to this problem. They are as follows:

- How to evaluate fake news with the help of real-time analysis?

- How to analyze whether the news is fake or not by using the exploratory analysis of data?

- How to implement machine-learning methods and models to detect the fake news on the COVID-19 dataset and explore all the patterns of the dataset?

- What are the recommended practical and impactful approaches to prevent the spreading of fake news via online websites and social media?

1.5. Rationale

Before social media and the internet, people got their news and information from television, radio, and newspapers. With the rise of the internet, news consumption has shifted online, mainly to social media such as Facebook, Instagram, and Twitter. Social media has therefore spread to nearly everyone and become a habit (Zhang et al. 2019). Excessive use of social media harms social life when false or fake news circulates, and the growth of fake news alongside social media is now a major issue for this generation. Some people use fake news to damage the reputation of well-known institutions for malicious ends, putting those institutions and the people connected with them in a dangerous position. In the current scenario, fake news is among the most dangerous forms of misinformation: it prevents people from knowing the truth and leaves them without reliable information (Pulido et al. 2020). The spread of fake news became a real challenge for individuals during the COVID-19 pandemic.

YouTube, an online video-sharing and social media platform and the world's second most visited platform, has become prime territory for the worst offenders, who can easily and confidently share worthless and fake information there. Alongside those scammers, the younger generation has also started sharing fake and unverified content on YouTube to gain views and subscribers. Fake news appeals to sentiment, which makes sentiment analysis of the retrieved and extracted information relevant. Google, the world's most used search engine and a gateway to almost all information, is also affected, as some people spread fake news through it for financial and political benefit. The main aim of this project is to detect this type of fake news and replace it with accurate information with the help of machine learning and real-time analysis (Sharma et al. 2019).

1.6. Problem statement

As fake news on social media increases, it needs to be stopped promptly on those platforms. Developers and computer scientists are currently trying to solve this problem with machine learning and real-time analytics. Specific high-level algorithms are used to identify fake news, and artificial intelligence is now combined with machine learning for this purpose. However, the complexity of these algorithms makes identification trickier, which is one reason why identifying fake news is not an easy task (Paka et al. 2021). Many problems arise during the identification of fake or false news. Sometimes neither people nor AI can trace the source of a fake story or check the proper site: recognized journalism sources are assumed never to produce fake news, yet scammers sometimes manage to spread fake news through mainstream news sources.

The URL is key to reaching a website, and scammers exploit it to spread fake news: the URL of a fake news site can look almost exactly like a real, genuine URL, for example one ending in “.com.co”, which creates problems for fake news identification (Meel et al. 2020). The spread of such fake URLs has become common since the COVID-19 pandemic. Sometimes a news source looks genuine but the named author does not exist, which confuses the identification process. Artificial intelligence and machine learning also have trouble detecting fake news when the item is a single, simple piece of content. When it comes to interpreting the subtlety of human language and thought, artificial intelligence and machine learning sometimes fail to produce an accurate result. At present there are often many fake stories about one subject, which adds a large amount of near-identical fake data; in such cases machine learning sometimes fails to detect fake news, because real-time analytics must examine the data, dates, and all other sources of each item.

1.7. Scope of the research

The spread of fake news carries significant risks and has a seriously harmful effect on society, sometimes extending to national security. A clear example of deliberately misleading information is an attempt to influence one person's opinion of another or the result of an election. To address this problem, this research project draws on real-time analysis and describes the conceptual mathematical underpinnings of an analytical model used to detect the credibility of news (Qayyum et al. 2019). Machine-learning models are also developed to prevent the spread of fake news via social media and websites, and Boolean-valued functions can distinguish fake articles and events from legitimate ones. Research on detecting fake news is still at an early stage, even as the spread of fake news becomes a greater hindrance and a serious threat to society. In 2020, the worldwide spread of COVID-19 became the largest international health issue. During that crisis, with everyone in lockdown, regular information and news were delivered through online websites, social media, and news channels, so any misleading information reaching the general public could be very risky. Fake news produces a distorted perception of reality among the general public, individuals, and enterprises (Shu and Liu, 2019). The real-time-analytics-based approach studied here for preventing the spread of fake news includes a mechanism that groups legitimate data into topic clusters. The scope of this research is to propose an effective and lasting solution to prevent the spread of fake news through online websites and social media such as WhatsApp, Facebook, and Wikipedia, because the spread of fake news has become a real issue for everyone.

1.8. Significance of research

With improved technology, people can now visit any news site and browse it freely. The public is often split into pro-government and anti-government camps, and some reporters exploit this division by presenting false news for political gain. This discourages people from questioning what they read, and those who do not think critically are easily misled. Fake news detection can help these people escape the fake news circles that divide the social community. Media literacy is very important for both spreading and receiving news, and with the help of real-time analysis media organizations can prevent the spread of fake news (Singh et al. 2021).

Social media is a platform where people can learn new skills, especially in situations like COVID-19 when people could not leave their homes, and machine learning and artificial intelligence can be used there to prevent the spread of fake news. During the COVID-19 period, a great deal of fake news about the disease circulated, causing harm and even death; with real-time analysis, developers are able to identify true news and offer people reliable answers. With such detection processes in place, people can connect with accurate sources, which can also spread positivity in society. By removing fake or false news with the help of real-time analysis, people can get current and quick information about a specific matter or about local news. Some people who blindly trust social media or the internet are easy victims of scammers who spread fake news; they make bad decisions based on false news, which can lead them into antisocial activity, and fake news detection can help overcome these situations as well (Qayyum et al. 2019). Above all, without real-time analysis of fake news, society and individuals cannot escape these traps.

1.9. Dissertation structure

Figure 1.9.1: Dissertation structure

Chapter 2: Literature Review

2.1. Introduction

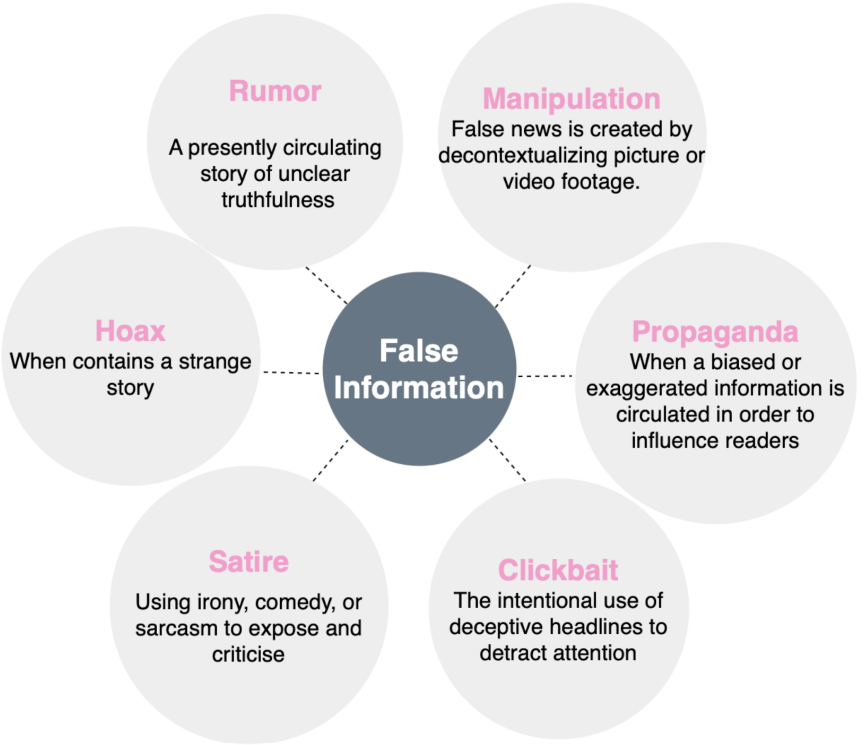

Fake news detection is the detection of news that has no connection to a real incident and is merely a rumor created by an individual or a group to cause chaos or violence among ordinary people. The spread of fake news has increased very rapidly in recent times, and the greatest difficulty is detecting it in its initial phase. One of the major channels is social media, which has recently become a major source of news; its main drawback is that it does not check whether news is real or fake. Fake news detection can be carried out with advanced technologies such as machine learning, and real-time analytics of news can help detect fake news very precisely. One major difficulty is that fake news is hard to report and analyze because it takes many forms, such as clickbait, rumor, hoax, parody, satire, and other deceptive news. It is very hard for a technology or method to categorize the type of fake news, and this affects the accuracy of fake news detection.

2.2. Concept of fake news detection

The concept of fake news detection addresses the major problem of the spread of fake news. The main area in which it needs to be implemented is social media, which has become a major source of fake news. One reason is that Facebook does not apply real-time analytics and does not check whether news is real or fake before it is uploaded to its servers (Zhang et al. 2019). Fake news detection can be implemented with real-time analytics and with AI-driven machine learning, which helps to detect fake news in real time by using natural language processing (NLP) and machine learning algorithms to analyze text against known common patterns. Real-time analytics of news can be defined as the process of collecting, interpreting, and analyzing data at the time it is created (Pulido et al. 2020). Machine learning (ML) is an advanced technology that allows a computer to learn from the data provided without being explicitly programmed, which helps it detect fake news accurately and easily. The first step in real-time analytics with machine learning is to train an algorithm so that it can differentiate between real and fake news.
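The idea of analyzing data at the time it is created can be illustrated with a small streaming sketch. It is only an illustration under stated assumptions: the function name `monitor`, the sliding-window size, and the alert threshold are hypothetical and do not come from the cited works, and `is_suspicious` stands in for whatever trained model would be used in practice.

```python
from collections import deque

def monitor(stream, is_suspicious, window=4, threshold=0.5):
    """Score each item as it arrives and raise an alert when the share of
    suspicious items in the last `window` items reaches `threshold`."""
    recent = deque(maxlen=window)  # keeps only the newest `window` flags
    for item in stream:
        flagged = is_suspicious(item)
        recent.append(flagged)
        alert = len(recent) == window and sum(recent) / window >= threshold
        yield item, flagged, alert

# Usage with a stand-in detector (assumption: a real system would call a model).
if __name__ == "__main__":
    posts = ["report", "x-rumor", "x-hoax", "update", "x-claim"]
    for post, flagged, alert in monitor(posts, lambda p: p.startswith("x-")):
        print(post, flagged, alert)
```

Because `monitor` is a generator, each item is handled as soon as it is read from the stream rather than after the whole dataset has been collected.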

For image data, this can be implemented with a combination of machine learning algorithms such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs). The two have different strengths: CNNs can effectively identify a particular object in an image, whereas RNNs can learn from sequences of images and videos (Paka et al. 2021). Natural language processing is used with machine learning algorithms because it enables a system to understand and generate speech. It can also work with any language to perform the action needed for the desired result. Among the machine learning algorithms that help detect fake news precisely and accurately is the decision tree, an important machine learning algorithm that works like a flow chart and is mainly used for classification problems. It achieves high accuracy through predictive models built with supervised learning methods (Meel and Vishwakarma, 2020). Other machine learning algorithms used to detect fake news accurately include random forest, support vector machine, naive Bayes, and k-nearest neighbors.
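As an illustration of one of the classifiers named above, the following is a minimal naive Bayes text classifier written with the Python standard library only. It is a sketch, not the implementation used in the cited studies: the four training sentences are invented, and whitespace tokenization and add-one smoothing are simplifying assumptions.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(docs):
    """docs: list of (text, label) pairs. Returns the counts the model needs."""
    word_counts = defaultdict(Counter)  # label -> word frequencies
    label_counts = Counter()            # label -> number of documents
    vocab = set()
    for text, label in docs:
        tokens = text.lower().split()
        word_counts[label].update(tokens)
        label_counts[label] += 1
        vocab.update(tokens)
    return word_counts, label_counts, vocab

def predict(model, text):
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        # log prior plus log likelihoods with add-one (Laplace) smoothing
        score = math.log(label_counts[label] / total_docs)
        denom = sum(word_counts[label].values()) + len(vocab)
        for tok in text.lower().split():
            score += math.log((word_counts[label][tok] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Invented toy examples, for illustration only.
TRAIN = [
    ("miracle cure kills virus overnight", "fake"),
    ("shocking secret cure doctors hide", "fake"),
    ("health ministry reports new vaccination figures", "real"),
    ("officials publish weekly case figures", "real"),
]
MODEL = train_naive_bayes(TRAIN)
```

With this toy data, `predict(MODEL, "secret miracle cure")` returns `"fake"`; a realistic model would need a far larger labeled dataset.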

2.3. Impact of fake news detection

Fake news detection has had a strongly positive impact, given that the rapid growth of fake news has been devastating people's lives by creating chaos for individuals and among communities (Bondielli and Marcelloni, 2019). The impact can be measured using data from previous research. Comparing previously measured impacts of fake news detection with present analysis helps to establish how effectively detection based on the latest technology is reducing the spread of fake news. Implementing different machine learning algorithms and methods can increase the impact of fake news detection. One example is a method that combines news content with social context features, which outperforms earlier methods, with accuracy reported to rise to 78.8% (Shu et al. 2019). When this method was implemented in a Facebook Messenger chatbot and validated with a real-world application, its accuracy in detecting fake news increased to 81.1%. The impact can also be analyzed through data collection and analysis of fake news, which can be gathered from any kind of website, search engine, or social media platform. Determining whether news is fake or real is very challenging, because context and source are hard to find and facts cannot feasibly be checked manually (Kula et al. 2020).

Modern fake news detection tools help researchers efficiently by automatically analyzing sequences of news data and checking all the aspects needed to decide whether news is real or fake. A main impact of fake news detection is the awareness it has created: people now better understand the harmful effects of fake news. People have also realized that they should not blindly believe any news and should try to verify whether it is real or fake. The impact of fake news can be seen in the recent COVID-19 pandemic, during which much fake news arose about the virus, ways to cure it, and vaccination; this affected the lives of those who fell ill and in some cases resulted in death. Later, with the help of fake news detection, such stories were identified, and people realized the truth and took the necessary steps to treat COVID-19 (Monti et al. 2019). Machine learning helps to create more precise fake news detection tools, which improves the accuracy of detection.

2.4. Challenges of fake news detection

Developing and implementing a new tool or software always brings challenges, which can become roadblocks that prevent the tool from reducing the problem it was built for. Fake news detection is no exception. First, analyzing news is itself the most challenging task, because it is sometimes very difficult to determine which type of fake news an item belongs to. Second, detection software is built on recent technology such as machine learning, which is not easy to operate (Meinert et al. 2018). It requires deep knowledge and thorough training to operate and implement. Few people have the skills to operate it smoothly and without error, so this shortage is a major challenge during implementation and management. A further challenge is analyzing the platforms through which news data spreads in order to trace the sources from which a story originated.

Figure 2.4.1: Challenges of fake news detection

Analyzing news data also means examining any sensitive topics it contains in order to assess the impact on the reader's mind. This is a major challenge for detection tools because the volume of data is very large and finding the sensitive content in it is very difficult. The available datasets are very limited, so detection tools often cannot identify fake news from previous datasets; the work then has to be done manually by researchers, which is very time-consuming and allows fake news to spread in the meantime (Ajao et al. 2018). The COVID-19 pandemic occurred recently and had no previous datasets to support analysis of fake news about the disease, so fake news spread very rapidly and little could be done about it. Detection tools could not help to reduce or stop the spread of fake news about the COVID-19 pandemic because they work by matching patterns against previous data available in their databases (Gangireddy et al. 2020). Software crashes are another common challenge during detection: like any software, a detection tool can crash at any time for reasons such as a virus introduced during data collection, minor or major system faults, or bugs that cause the software to malfunction.

2.5. Identification Approaches of Fake News

Modern technology makes news available in real time, which is a great help to everyone. The situation becomes complicated, however, when the shared news is invalid or only partially accurate. In that case serious problems such as rumors can arise, spread, and affect the social life of citizens. To deal with this problem, it is very important to distinguish real news from fake news. This can be done in the following steps. The first is identification of the site: users should check the authenticity and validity of the website that provides the news. Users need to confirm that the website sharing the news is legitimate, and only genuine websites should be relied on when accepting any news.

Some fake news websites imitate an original site by using a nearly identical domain name. To avoid this trap, users must check whether the website address ends in .co or .com; if the ending differs from that of the original website, the site should be treated as a fake news website.

Good news stories always cite a reputable or authenticated source, and trustworthy outlets authenticate the videos, images, and clips they take from other news sources before presenting them. This makes a report trustworthy for every user. Where a news outlet makes a claim without checking whether its reference is valid, and the user has no way to verify it, the user should treat the item as fake news and avoid it. When in doubt about a news item, users should use a fact-checking process, for example a simple fact-checking website such as PolitiFact or FactCheck.org. Fake news can often be detected through a few small observations. Users should check whether news titles are excessively catchy, and should check the authenticity of the images, videos, and sources the news outlet uses. With this little care, users can identify fake and potentially harmful news.

Another fact-checking method is to use Wikipedia, which is a good resource for checking fake news websites because it regularly updates its list of fake news websites. To benefit from this feature, users should cross-check a website's name against the list. This matters because fake news websites share not only fake news but also potentially harmful malware. Another fact-checking aid is Google. To deal with fake news, Google introduced a fact-checking tool in its toolbox in 2022. More than 150,000 fact-checking resources are available on Google to help reach the authenticated source of a news item. To use it, the user enters the topic or title in the search bar; Google then returns results from the resources in its data warehouses, and if the information does not match those resources, it suggests that the information may be fake and warns the user to be alert and avoid that news (Apuke et al. 2021).
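The domain-ending check described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the trusted domains in `TRUSTED_DOMAINS` are placeholders invented for the example, and comparing only the first label of the host name is a deliberately crude heuristic rather than an established detection method.

```python
from urllib.parse import urlparse

# Hypothetical allow-list of genuine news domains (placeholders, not real outlets).
TRUSTED_DOMAINS = {"example-news.com", "example-times.co.uk"}

def hostname(url):
    """Return the lower-cased host of a URL without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host

def looks_like_imitation(url):
    """True when the host is not trusted but reuses a trusted site's name
    with a different ending, e.g. '.com.co' instead of '.com'."""
    host = hostname(url)
    if host in TRUSTED_DOMAINS:
        return False
    site_name = host.split(".")[0]
    return any(site_name == trusted.split(".")[0] for trusted in TRUSTED_DOMAINS)
```

For example, `looks_like_imitation("https://www.example-news.com.co/story")` returns `True`, while the genuine `https://example-news.com/story` returns `False`. URLs must include the scheme (such as `https://`) for `urlparse` to find the host.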

2.6. Application of real-time analytics in fake news detection

Fake news is a long-standing problem, and it has grown since 2016, in the run-up to the United States election (Van Der Linden et al. 2020). Machine learning is very helpful for detecting fake news and flagging it quickly, since the number of fake news items is higher than before. In machine learning, the “TF-IDF” vectorization technique and the “logistic regression” technique are very helpful for this. When a TF-IDF model was used to extract fake news, it performed far better than the linear models and modeled real news well. The simplest method for fake news detection represents text data using the “bag of words” approach. In this method every word in a news item is examined, because every word matters for real-time detection; single words and multiword “n-grams” are used, and the frequency of each is aggregated and analyzed for cues of inaccuracy. “Shallow syntax”, or part-of-speech (POS) tagging, is very effective for detecting linguistic cues of inaccuracy and also helps in detecting fake news through real-time analytics (Zhou et al. 2019).
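The bag-of-words, n-gram, and TF-IDF ideas mentioned above can be made concrete with a short standard-library sketch. The weighting used here is the plain textbook form, term frequency multiplied by log(N / document frequency); this is an assumption for illustration, since libraries differ in smoothing and normalization, and the resulting weights would then be passed to a classifier such as logistic regression.

```python
import math
from collections import Counter

def ngrams(text, n=1):
    """Split text on whitespace and return its word n-grams."""
    tokens = text.lower().split()
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def tf_idf(docs, n=1):
    """Return one {term: weight} dict per document."""
    bags = [Counter(ngrams(d, n)) for d in docs]        # bag of words per doc
    df = Counter(term for bag in bags for term in bag)  # document frequency
    total_docs = len(docs)
    weights = []
    for bag in bags:
        length = sum(bag.values())
        weights.append({
            term: (count / length) * math.log(total_docs / df[term])
            for term, count in bag.items()
        })
    return weights
```

A term that occurs in every document receives a weight of zero, so common words contribute nothing, while terms concentrated in a few documents receive the highest weights.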

Figure 2.6.1: Architecture of real-time fake news detection system

The simplicity of language is also important in real news content; when fake news uses vague or ambiguous wording, the “isolated n-grams” model flags it as suspicious content. Many data analytics researchers have found this tool very helpful for detecting fake news in real time. Word analysis alone is not enough to determine deception, so the syntax of the language is also checked. “Deep syntax” analysis is carried out using a probabilistic context-free grammar (PCFG). In this process, each sentence is transformed into a set of rewrite rules that describe its syntactic structure, with noun and verb phrases rewritten in terms of their syntactic constituent parts. The method is applied to distinguish rule categories, with a reported accuracy of 85-91% (Egelhofer et al. 2019).

Third-party tools such as the “Stanford Parser” (MacCartney and Manning, 2006) and the “AutoSlog-TS” syntax analyzer are good for automation in real-time analytics. Syntax analysis alone is not enough to detect deception, so combining it with other tools such as “network analysis techniques” is very helpful. Another good approach in real-time analytics is “semantic analysis”, an alternative way of finding deception cues. This approach extends the n-gram plus syntax model by incorporating profile compatibility features (Allen et al. 2020). The intuition is that when a writer has no prior reference for what is being described, the analysis may surface enough evidence in the text to show that the data is false. This process also has limitations: the ability to determine the alignment between attributes and descriptors depends on a sufficient number of profiles of mined content (Pennycook et al. 2021).
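To show what rewrite rules in a probabilistic context-free grammar look like, the sketch below scores a small parse tree by multiplying the probabilities of the rules it uses. The grammar, the words, and every probability are invented for illustration; a real system would estimate them from parsed text and would use the rules as features for a classifier.

```python
# Toy grammar: (left-hand side, right-hand side) -> probability.
# All rules and numbers are made up for this example.
PCFG = {
    ("S", ("NP", "VP")): 1.0,
    ("NP", ("officials",)): 0.6,
    ("NP", ("sources",)): 0.4,
    ("VP", ("V", "NP")): 0.7,
    ("VP", ("V",)): 0.3,
    ("V", ("confirm",)): 0.5,
    ("V", ("deny",)): 0.5,
}

def derivation_probability(tree):
    """tree is (label, child, ...); each child is a subtree or a word string.
    The probability of a derivation is the product of its rule probabilities."""
    label, *children = tree
    rhs = tuple(c if isinstance(c, str) else c[0] for c in children)
    prob = PCFG[(label, rhs)]
    for child in children:
        if not isinstance(child, str):
            prob *= derivation_probability(child)
    return prob

# "officials deny sources" parsed as S -> NP VP, VP -> V NP
TREE = ("S", ("NP", "officials"), ("VP", ("V", "deny"), ("NP", "sources")))
```

Here `derivation_probability(TREE)` multiplies 1.0, 0.6, 0.7, 0.5, and 0.4, which is approximately 0.084.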

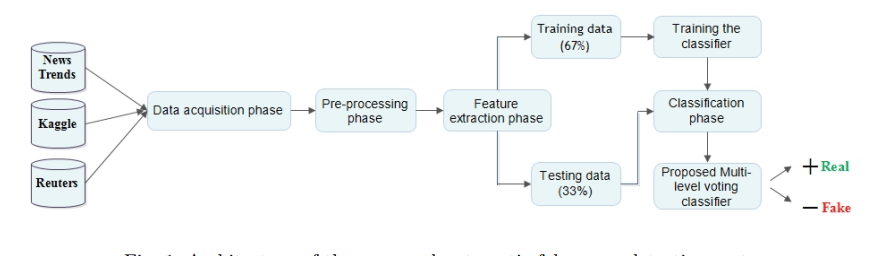

2.7. Implementation of Fake news detection using machine learning

Internet use is increasing, and information can be exchanged among users through it. The use of social media has increased manifold in recent times, and users can post comments, share pictures and news, and express their views on current events.

Fake news can spread through the internet and social media sites, and this can cause a lot of confusion among users (Jones-Jang et al. 2021). Fake news is spread among the users of social media websites to malign the public image of a person or an organization. Machine learning is a field of study that is being applied to perform real-time analytics and identify the fake news that is spread using the internet.

2.7.1. Machine Learning

Machine Learning refers to the process of training a computer with data to observe patterns and trends in the data. The required solutions can be obtained from the patterns that have been observed in the dataset by the machine that has been trained to do so. In short machine learning is a technique of training the computer to perform tasks and the machine learns from the experiences gathered by repetitions of the task to perform the task more accurately every time the machine performs the tasks (Sharma et al. 2019). The machine is trained using a dataset and the data plays a central role in machine learning.

“There are different types of machine learning which are called supervised machine learning” and “unsupervised machine learning”. “Supervised machine Learning” refers to the tasks performed using a labeled dataset while “Unsupervised machine learning” refers to the task that is performed using a dataset that is not labeled. Machine learning can be performed using various kinds of algorithms and these algorithms are either “supervised machine learning” algorithms or “unsupervised machine learning” algorithms. Machine learning begins with the collection of data from a reliable data source and the quality of the data determines the type of solution that is obtained from the analysis (Ajao et al. 2018). The data is scrutinized for error as the data is raw and the errors are removed from the data. The data is checked for anomalies and inconsistencies and the missing values in the dataset need to be replaced. The process of cleaning the dataset from errors and inconsistencies is called data pre-processing and this is a vital step in the machine learning process.
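The pre-processing step can be sketched as a small cleaning function. The record layout (`text`, `label`, and `source` fields), the two label values, and the placeholder used for a missing source are assumptions made for this example and are not taken from the dataset used in the project.

```python
import re

def preprocess(records, default_source="unknown"):
    """Clean a list of dicts with 'text', 'label', and optional 'source' keys."""
    cleaned, seen = [], set()
    for rec in records:
        text = (rec.get("text") or "").strip()
        text = re.sub(r"<[^>]+>", " ", text)             # drop HTML tag remnants
        text = re.sub(r"\s+", " ", text).strip().lower()  # normalize spacing and case
        label = rec.get("label")
        if not text or label not in {"fake", "real"}:     # unusable row: skip it
            continue
        if text in seen:                                  # exact duplicate: skip it
            continue
        seen.add(text)
        cleaned.append({
            "text": text,
            "label": label,
            "source": rec.get("source") or default_source,  # replace missing value
        })
    return cleaned
```

Rows with empty text or an unknown label are dropped, exact duplicates are removed after normalization, and a missing source is replaced with a placeholder value.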

After pre-processing, the dataset is ready for further analysis using different machine learning algorithms. The dataset is divided into training data and testing data, and the machine is trained with the training data. Once trained, the algorithms are applied to the testing data to obtain the desired results. Online platforms give users access to news, which is beneficial for them (Batailler et al. 2022). However, cybercriminals use the same platforms to spread fake news and confuse users. Different machine learning algorithms are used for detecting fake news on online platforms, but identifying fake news remains a big challenge.
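The division into training and testing data can be sketched as follows. This is an illustrative example only: the 25% test fraction, the toy dataset, and the "majority label" stand-in for a trained model are all assumptions, chosen to keep the split and the evaluation step visible.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of rows and split it into (train, test)."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = max(1, int(len(rows) * test_fraction))
    return rows[n_test:], rows[:n_test]

def train_majority(train_rows):
    """Toy 'model': remember the most common label in the training data."""
    counts = {}
    for _, label in train_rows:
        counts[label] = counts.get(label, 0) + 1
    return max(counts, key=counts.get)

def accuracy(predicted_label, test_rows):
    """Fraction of held-out rows whose label matches the prediction."""
    hits = sum(1 for _, label in test_rows if label == predicted_label)
    return hits / len(test_rows)

# Hypothetical dataset: 12 (text, label) pairs, one third labeled fake.
data = [(f"article {i}", "fake" if i % 3 == 0 else "real") for i in range(12)]
train, test = train_test_split(data)
model = train_majority(train)   # fitted on training data only
score = accuracy(model, test)   # measured on unseen testing data
```

Keeping the test rows out of training is the point of the split: the score then reflects how the model behaves on articles it has not seen.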

2.7.2. NLP and Sentiment Analysis

Natural language processing (NLP) is used for the detection of fake news on online platforms. It combines speech generation with speech understanding, and it is used to detect activities carried out in different languages. A researcher proposed a system for extracting actions from languages such as Dutch and English by using Named Entity Recognition, Emotion Analyzer and Detection, Parts of Speech taggers, and Semantic Role Labelling. Sentiment analysis can be used to identify the opinions and emotions of users on social media websites, through which cybercriminals attempt to spread fake news. Components such as a sentiment models database and a glossary of meaning are used for the sentiment analysis of users' opinions on social media.
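As a rough illustration of how a glossary of word meanings can drive sentiment analysis, the sketch below scores a text against a tiny hand-made lexicon. The lexicon entries, their weights, and the simple negation rule are assumptions made for this example, not components described in the cited work.

```python
import re

# Tiny illustrative lexicon (assumed values); real systems use far larger ones.
LEXICON = {
    "good": 1, "great": 2, "trust": 1,
    "bad": -1, "terrible": -2, "hoax": -2, "lie": -2,
}
NEGATORS = {"not", "no", "never"}

def sentiment_score(text):
    """Sum lexicon values over the tokens, flipping a word after a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok, 0)
        if value and i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    return score

def sentiment_label(text):
    """Map the numeric score to a coarse opinion label."""
    s = sentiment_score(text)
    if s > 0:
        return "positive"
    if s < 0:
        return "negative"
    return "neutral"

label = sentiment_label("This story is a terrible hoax")
```

A lexicon approach like this is easy to inspect but misses sarcasm and context, which is one reason trained models are often used instead.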

2.7.3. Supervised ML algorithms

Machine learning classification allows software to achieve accurate outcomes without being fully reprogrammed. Decision tree classifiers are used for the detection of fake news on social media, and they work on a structure that is similar to a flow chart (Bhatt et al. 2018). Each node of a decision tree describes a condition or a test on an attribute, and the tree branches according to the result of that test. Other classifiers include Random Forest, Support Vector Machine, Naive Bayes, and k-Nearest Neighbours. Some machine learning algorithms can be combined for the detection of fake news, and the primary aim when designing such detection systems is to achieve the highest classification performance. When several ML algorithms are combined, the classifier that performs best among them may be given added emphasis (de Oliveira et al. 2021). A combination of ML algorithms is reported to be more accurate than a single algorithm, and it is therefore preferred for detecting fake news.
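The two ideas in this paragraph, a flow-chart-like decision tree and a combination of classifiers in which the best performer gets added emphasis, can be sketched together. Everything specific here is hypothetical: the article features, the thresholds, and the weights (imagined as validation accuracies) are illustrative assumptions, and weighted voting is only one of several ways to combine classifiers.

```python
def tree_classifier(article):
    """Hand-written decision tree: each `if` is a node testing one attribute."""
    if article["exclamation_marks"] > 3:
        return "fake"
    if article["has_source_link"]:
        return "real"
    return "fake" if article["all_caps_words"] > 5 else "real"

def caps_classifier(article):
    """Single-rule classifier based on shouting words."""
    return "fake" if article["all_caps_words"] > 2 else "real"

def link_classifier(article):
    """Single-rule classifier based on whether a source is linked."""
    return "real" if article["has_source_link"] else "fake"

def weighted_vote(classifiers, weights, article):
    """Combine predictions; each classifier's vote counts by its weight."""
    totals = {}
    for clf, weight in zip(classifiers, weights):
        label = clf(article)
        totals[label] = totals.get(label, 0.0) + weight
    return max(totals, key=totals.get)

classifiers = [tree_classifier, caps_classifier, link_classifier]
article = {"exclamation_marks": 5, "has_source_link": True, "all_caps_words": 1}

# Hypothetical weights: the tree is assumed to be the strongest classifier.
emphasised = weighted_vote(classifiers, [0.9, 0.4, 0.4], article)
# With equal weights the two weaker classifiers outvote the tree.
equal = weighted_vote(classifiers, [1.0, 1.0, 1.0], article)
```

For this article the tree predicts "fake" while the other two predict "real", so the outcome depends on how much emphasis the stronger classifier receives.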

Machine learning plays a key role in the detection of fake news on social media websites, and its use is becoming increasingly popular. A lot of research is taking place on the application of machine learning in various fields, including sentiment analysis and the detection of fake news. The accuracy of ML algorithms in fake news detection on online platforms is usually found to be very high. Data plays a very important role in the analysis, since the quality of the results depends on the quality of the dataset and on how accurately the dataset represents the area of research.

2.8. Related Work

A lot of research is taking place to distinguish fake news from authentic news published on various websites. Cybercriminals try to harm the reputation of individuals or organizations by spreading fake news on the internet. If it is not detected early, the news can spread very quickly among people whose opinions might be influenced by reading it (de Souza et al. 2020). Fake news can be classified as fabrications, hoaxes, and satire. Fabrications consist of news about events that did not take place, or celebrity gossip. Hoaxes consist of false news spread on social media in the expectation of attracting the attention of news channels and websites. Satire consists of humorous, false versions of genuine news, written with heavy irony.

A corpus of satire news has been built alongside genuine news in four areas: science, civics, soft news, and business. The study covered 240 news articles. Classification was done using feature sets consisting of punctuation, grammar, and absurdity. A researcher suggested a stylometric method for telling fake news from authentic news among the articles checked. The stylometric features include character and stop word n-grams, readability indices, and features such as the average word count per paragraph and external links (Deepak and Chitturi, 2020). The dataset used consists of 1627 articles obtained from the Buzzfeed dataset, including 299 fake articles. The stylometric approach was unable to distinguish the fake news articles from the authentic news articles.
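To make the stylometric features concrete, the following sketch computes a few of them for a piece of text. The stop word list, the n-gram sizes, the paragraph delimiter, and the URL pattern are assumptions chosen for illustration; readability indices are left out, and the cited study may have defined its features differently.

```python
import re
from collections import Counter

# Small assumed stop word list; real feature extractors use longer lists.
STOP_WORDS = {"the", "a", "an", "of", "in", "on", "and", "to", "is", "that"}

def char_ngrams(text, n=3):
    """Count overlapping character n-grams in the lowercased text."""
    text = text.lower()
    return Counter(text[i:i + n] for i in range(len(text) - n + 1))

def stop_word_ngrams(text, n=2):
    """Count n-grams over the sequence of stop words (content words dropped)."""
    words = re.findall(r"[a-z']+", text.lower())
    stops = [w for w in words if w in STOP_WORDS]
    return Counter(tuple(stops[i:i + n]) for i in range(len(stops) - n + 1))

def avg_words_per_paragraph(text):
    """Average word count over paragraphs separated by blank lines."""
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    if not paragraphs:
        return 0.0
    return sum(len(p.split()) for p in paragraphs) / len(paragraphs)

def external_link_count(text):
    """Count http/https URLs appearing in the text."""
    return len(re.findall(r"https?://\S+", text))

sample = "The mayor of the town spoke.\n\nRead more at https://example.org today."
features = {
    "char_3grams": char_ngrams(sample),
    "stop_2grams": stop_word_ngrams(sample),
    "avg_words": avg_words_per_paragraph(sample),
    "links": external_link_count(sample),
}
```

Feature dictionaries of this kind would then be turned into numeric vectors and passed to a classifier.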

Research has also been done on the detection of deceptive content in domains such as consumer review articles, forums, advertisements on online crowdfunding platforms, and online dating. Self-references and negative or positive words are used to distinguish liars from truth-tellers. Other studies have analyzed the number of words, self-references, sentences, and temporal and spatial information related to deceptive content. Other features that have been investigated for fake news identification include diversity, non-immediacy, and informality.

2.9. Methods based on Intervention

Techniques are needed for interpreting and updating the selection of actions for dealing with fake news based on real-time content propagation dynamics.

2.9.1. Strategies for mitigation

Fake news needs to be detected early so that it can be prevented from spreading to a large number of people. There are several well-known models for representing information diffusion on social media websites, including the Linear Threshold and Independent Cascade models, as well as point process models such as the Hawkes process model (Gaozhao, 2021). The diffusion process begins with seed nodes, which are activated at the first time step. When a node u is activated at a given time step, it makes an activation attempt on each inactive neighbor v. The attempt succeeds with a computed probability, and once a node is activated it remains in that state for the remainder of the diffusion process. Point process models differ from this activation process: they are described by an intensity function.
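The activation process described above corresponds to the Independent Cascade model, and a single simulated run can be sketched as follows. The example graph and the use of one shared activation probability for every edge are simplifying assumptions; in practice each edge would usually carry its own probability.

```python
import random

def independent_cascade(graph, seeds, prob, seed=0):
    """Simulate one run of the Independent Cascade model.

    graph: dict mapping node -> list of neighbours (directed edges).
    seeds: nodes that are active at the first time step.
    prob:  activation probability shared by all edges (a simplification).
    Returns the set of all nodes active when the process stops.
    """
    rng = random.Random(seed)
    active = set(seeds)
    frontier = list(seeds)          # nodes activated in the previous step
    while frontier:
        next_frontier = []
        for u in frontier:
            for v in graph.get(u, []):
                # u gets a single chance to activate each inactive neighbour v.
                if v not in active and rng.random() < prob:
                    active.add(v)   # once active, v stays active
                    next_frontier.append(v)
        frontier = next_frontier
    return active

# Hypothetical follower graph: an edge u -> v means v sees what u shares.
graph = {"a": ["b", "c"], "b": ["d"], "c": ["d"], "d": [], "e": ["a"]}
reached = independent_cascade(graph, seeds=["a"], prob=0.5)
```

Because the outcome of each run is random, the expected spread is normally estimated by averaging the size of the active set over many runs.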

A researcher suggested a decontamination strategy for users who have been exposed to deceitful news. Either the Independent Cascade or the Linear Threshold model is used to model the diffusion process. A greedy algorithm selects the most suitable set of seed users from whom the diffusion of true news begins, so that a certain portion of the users are decontaminated from the fake news (Gawronski, 2021). One disadvantage of this approach is that it is corrective: it is applied only once the fake news has already spread.
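The greedy choice of seed users can be sketched under strong simplifications. Here the goal is reduced to maximising the expected reach of the true news under an Independent Cascade model, used as a stand-in for the number of decontaminated users; the graph, the shared edge probability, and the number of simulation runs are all hypothetical, and the original strategy may define its objective differently.

```python
import random

def simulate_ic(graph, seeds, prob, rng):
    """One Independent Cascade run; returns the set of activated nodes."""
    active, frontier = set(seeds), list(seeds)
    while frontier:
        next_frontier = []
        for u in frontier:
            for v in graph.get(u, []):
                if v not in active and rng.random() < prob:
                    active.add(v)
                    next_frontier.append(v)
        frontier = next_frontier
    return active

def expected_reach(graph, seeds, prob, runs, rng):
    """Monte Carlo estimate of how many users the true news reaches."""
    total = sum(len(simulate_ic(graph, seeds, prob, rng)) for _ in range(runs))
    return total / runs

def greedy_seeds(graph, k, prob=0.3, runs=200, seed=0):
    """Pick k seeds one at a time, each maximising the estimated reach.

    Every node must appear as a key of `graph`, even with no neighbours.
    """
    rng = random.Random(seed)
    chosen = []
    for _ in range(k):
        best, best_reach = None, -1.0
        for node in sorted(graph):
            if node in chosen:
                continue
            reach = expected_reach(graph, chosen + [node], prob, runs, rng)
            if reach > best_reach:
                best, best_reach = node, reach
        if best is None:            # fewer than k nodes available
            break
        chosen.append(best)
    return chosen

graph = {"a": ["b", "c", "d"], "b": [], "c": [], "d": [],
         "e": ["f"], "f": [], "g": []}
# prob=1.0 makes the simulation deterministic for this small demonstration.
seeds = greedy_seeds(graph, k=2, prob=1.0, runs=1)
```

With certain activation the first pick is the user with the most followers, and the second is the user who adds the most new reach on top of the first.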

Another approach to countering fake news on social media websites is called competing cascades. It is an intervention strategy in which authentic news is introduced on the social media platform to compete with the fake news that is already present. A researcher formulated an “influence blocking maximization” problem for determining an optimal strategy, in which k seed users are chosen so as to reduce the number of users who get activated by fake news rather than authentic news. This model assumes that once a user is activated by either the authentic news or the false news, the user remains activated throughout the cascade. Two models are used for the diffusion process, since the power of true news might differ from that of the false news spread on online platforms (Hosseinimotlagh and Papalexakis, 2018). There is no external moderation after the seed users of the true news cascade are chosen at the start of the process; from then on, the two news cascades compete with each other. The model does not distinguish between users who are merely exposed to fake news and users who reshare it.

2.9.2. Identification strategies

By monitoring the network, fake news items on websites can be detected and prevented from spreading on a large scale. Computer-aided social media accounts are used for filtering fake news from the authentic news that is spread on the internet. The network is monitored for the transmission of suspicious news so that its spread can be stopped as early as possible, which limits the negative impact of false news. Network topologies need to be examined when monitoring false news on the internet (Jadhav and Thepade, 2019). However, network monitoring on a large scale may be too costly to implement.

The behavior of people who access websites containing fake news can be modeled using various methods, and the patterns and tendencies in their use of social media websites are studied to determine the probability of exposure to fake news. Intervention methods are comparatively cumbersome to implement and are usually avoided in practical contexts. Fake news on the internet can be detrimental to public opinion in society. Fake news should therefore be prevented from spreading at all costs.

2.10. Summary

This review of the identification of fake news using real-time analytics provides information about fake news, its various categories, and its impact on people's lives. The analysis also covers the fake news detection tools that were used previously and the newer technologies that have helped these tools evolve. Advanced detection tools improve accuracy and reduce the time and effort that were previously required. The analysis also provides knowledge of technologies such as machine learning and of the concept of real-time analytics, which helps researchers advance their knowledge and upgrade their skills so that they can develop the project further.

The development of the project makes it easier to find fake news accurately and in very little time, which makes it far more difficult for fake news spreaders to spread fake news. The challenges of implementing fake news detection have also been identified through the analysis, which can help in finding solutions so that problems do not arise during the implementation of false news detection tools. As a further advancement, the latest technologies can be used to upgrade the tools so that they offer more features at detection time. For example, with the help of Artificial Intelligence, the sensitive part of a news item could be detected and removed using machine learning, reducing the impact and chaos caused by the spread of fake news. This summarizes the information collected during the analysis of the implementation of fake news detection using real-time analytics.

References

Ajao, O., Bhowmik, D. and Zargari, S., 2018, July. Fake news identification on Twitter with hybrid CNN and RNN models. In Proceedings of the 9th International Conference on Social Media and Society (pp. 226-230).

Batailler, C., Brannon, S.M., Teas, P.E. and Gawronski, B., 2022. A signal detection approach to understanding the identification of fake news. Perspectives on Psychological Science, 17(1), pp.78-98.

Bhatt, G., Sharma, A., Sharma, S., Nagpal, A., Raman, B. and Mittal, A., 2018, April. Combining neural, statistical, and external features for fake news stance identification. In Companion Proceedings of the The Web Conference 2018 (pp. 1353-1357).

Bondielli, A. and Marcelloni, F., 2019. A survey on fake news and rumor detection techniques. Information Sciences, 497, pp.38-55.

de Oliveira, N.R., Pisa, P.S., Lopez, M.A., de Medeiros, D.S.V. and Mattos, D.M., 2021. Identifying fake news on social networks based on natural language processing: trends and challenges. Information, 12(1), p.38.

de Souza, J.V., Gomes Jr, J., Souza Filho, F.M.D., Oliveira Julio, A.M.D. and de Souza, J.F., 2020. A systematic mapping on automatic classification of fake news in social media. Social Network Analysis and Mining, 10(1), pp.1-21.

Deepak, S. and Chitturi, B., 2020. A deep neural approach to fake news identification. Procedia Computer Science, 167, pp.2236-2243.

Gangireddy, S.C.R., Long, C. and Chakraborty, T., 2020, July. Unsupervised fake news detection: A graph-based approach. In Proceedings of the 31st ACM conference on hypertext and social media (pp. 75-83).

Gaozhao, D., 2021. Flagging fake news on social media: An experimental study of media consumers' identification of fake news. Government Information Quarterly, 38(3), p.101591.

Gawronski, B., 2021. Partisan bias in the identification of fake news. Trends in Cognitive Sciences, 25(9), pp.723-724.

Hosseinimotlagh, S. and Papalexakis, E.E., 2018, February. Unsupervised content-based identification of fake news articles with tensor decomposition ensembles. In Proceedings of the Workshop on Misinformation and Misbehavior Mining on the Web (MIS2).

Jadhav, S.S. and Thepade, S.D., 2019. Fake news identification and classification using DSSM and improved recurrent neural network classifier. Applied Artificial Intelligence, 33(12), pp.1058-1068.

Jones-Jang, S.M., Mortensen, T. and Liu, J., 2021. Does media literacy help the identification of fake news? Information literacy helps, but other literacies don’t. American Behavioral Scientist, 65(2), pp.371-388.

Kula, S., Choraś, M., Kozik, R., Ksieniewicz, P. and Woźniak, M., 2020, June. Sentiment analysis for fake news detection using neural networks. In International conference on computational science (pp. 653-666). Springer, Cham.

Meel, P. and Vishwakarma, D.K., 2020. Fake news, rumor, information pollution in social media and web: A contemporary survey of state-of-the-art, challenges and opportunities. Expert Systems with Applications, 153, p.112986.

Meinert, J., Mirbabaie, M., Dungs, S. and Aker, A., 2018, July. Is it fake?–Towards an understanding of fake news in social media communication. In International Conference on Social Computing and Social Media (pp. 484-497). Springer, Cham.

Monti, F., Frasca, F., Eynard, D., Mannion, D. and Bronstein, M.M., 2019. Fake news detection on social media using geometric deep learning. arXiv preprint arXiv:1902.06673.

Paka, W.S., Bansal, R., Kaushik, A., Sengupta, S. and Chakraborty, T., 2021. Cross-SEAN: A cross-stitch semi-supervised neural attention model for COVID-19 fake news detection. Applied Soft Computing, 107, p.107393.

Pulido, C.M., Ruiz-Eugenio, L., Redondo-Sama, G. and Villarejo-Carballido, B., 2020. A new application of social impact in social media for overcoming fake news in health. International journal of environmental research and public health, 17(7), p.2430.

Qayyum, A., Qadir, J., Janjua, M.U. and Sher, F., 2019. Using blockchain to rein in the new post-truth world and check the spread of fake news. IT Professional, 21(4), pp.16-24.

Sharma, K., Qian, F., Jiang, H., Ruchansky, N., Zhang, M. and Liu, Y., 2019. Combating fake news: A survey on identification and mitigation techniques. ACM Transactions on Intelligent Systems and Technology (TIST), 10(3), pp.1-42.

Sharma, S. and Sharma, D.K., 2019, November. Fake News Detection: A long way to go. In 2019 4th International Conference on Information Systems and Computer Networks (ISCON) (pp. 816-821). IEEE.

Shu, K., Mahudeswaran, D. and Liu, H., 2019. FakeNewsTracker: a tool for fake news collection, detection, and visualization. Computational and Mathematical Organization Theory, 25(1), pp.60-71.

Singh, V.K., Ghosh, I. and Sonagara, D., 2021. Detecting fake news stories via multimodal analysis. Journal of the Association for Information Science and Technology, 72(1), pp.3-17.

Zhang, C., Gupta, A., Kauten, C., Deokar, A.V. and Qin, X., 2019. Detecting fake news for reducing misinformation risks using analytics approaches. European Journal of Operational Research, 279(3), pp.1036-1052.
