Introduction - Data Analysis for Strategic Decisions

In this report, a decision tree was produced for Umbrella, a fictional company, to optimise the production of Growell, considering variables such as production volume, sales probabilities, costs, and salvage values. In addition, a COVID-19 test sensitivity table was presented, highlighting the meaning of sensitivity values and how they affect positive and negative predictive values. Finally, the common approach of splitting data into training and testing datasets was discussed, highlighting the importance of model evaluation and generalisation to new data.

Part 1: The Analysis of Umbrella Company

The decision tree

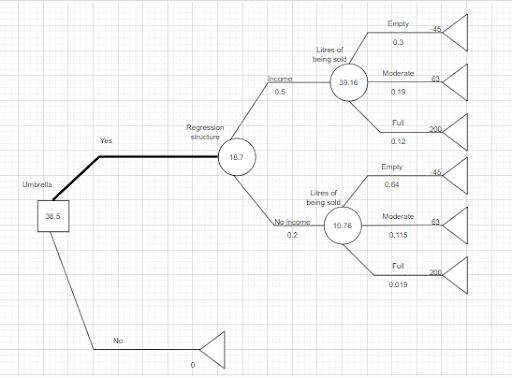

A decision tree was constructed for Umbrella's decision on the number of litres of Growell to produce in the next batch run, showing the possible scenarios and their associated values.

Figure 1: The decision tree of Umbrella Company

A decision tree is a graphical representation of a decision-making process that includes the different possible outcomes and their associated probabilities. For Umbrella's decision on the number of litres of Growell compost to produce in the next batch run, a decision tree can be created to visually represent the different options and their expected outcomes (Sheng et al., 2021). The decision tree starts with a decision node representing the choice of batch size: 20,000 litres, 30,000 litres, or 40,000 litres.

Figure 2: The analysis of Umbrella Company

1.1 Decision Node

Represents the choice of the number of litres to produce.

- Choice 1: Produce 20,000 litres (Branch A)

- Choice 2: Produce 30,000 litres (Branch B)

- Choice 3: Produce 40,000 litres (Branch C)

1.2 Chance Node

Represents the quantity sold.

- Probability of 20,000 litres being sold = 1/3 (Branch A1)

- Probability of 30,000 litres being sold = 1/3 (Branch B1)

- Probability of 40,000 litres being sold = 1/3 (Branch C1)

1.3 Terminal Nodes

Represent the outcomes and their associated values.

For every combination of decision and sales level, calculate the costs and revenues, where S is the number of litres sold:

- Revenue = S × £10 (selling price per litre)

- Costs = S × (£5.50 manufacturing + £0.50 handling + £0.50 warehousing + £1.50 promotion) per litre

- Salvage value (if not sold within 90 days) = (40,000 − S) × £5 (Sheng et al., 2021)

- Extra cost (if comparable compost must be purchased) = S × (£12 − £10) per litre

Then calculate the net value for every combination of decision and sales level. In text form, the terminal branches are A1, B1, C1, A2, B2, C2, A3, B3, C3. This decision tree structure visualises the decision-making process and yields the expected value of every option. For Umbrella, the best choice is the one that maximises the expected value.
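The expected-value calculation across the nine terminal branches can be sketched as follows. This is a minimal illustration rather than the report's own working: the per-litre figures come from the text, but treating costs as incurred on litres produced, salvage as applying to unsold litres, and the £2 extra cost as applying to any shortfall are assumptions, and the names `net_value` and `expected_value` are hypothetical.

```python
# Per-litre figures taken from the text.
PRICE = 10.00
UNIT_COST = 5.50 + 0.50 + 0.50 + 1.50  # manufacturing + handling + warehousing + promotion
SALVAGE = 5.00
BUY_IN = 12.00  # price of comparable product bought in to cover a shortfall

QUANTITIES = [20_000, 30_000, 40_000]
DEMAND_PROBS = {20_000: 1 / 3, 30_000: 1 / 3, 40_000: 1 / 3}


def net_value(produced, demand):
    """Net value of one terminal branch (assumed payoff structure)."""
    sold_from_stock = min(produced, demand)
    unsold = produced - sold_from_stock
    shortfall = demand - sold_from_stock
    revenue = PRICE * sold_from_stock
    costs = UNIT_COST * produced               # assumption: cost on litres produced
    salvage = SALVAGE * unsold                 # assumption: salvage on unsold litres
    extra_cost = (BUY_IN - PRICE) * shortfall  # assumption: loss per litre bought in
    return revenue - costs + salvage - extra_cost


def expected_value(produced):
    """Probability-weighted net value of one production decision."""
    return sum(p * net_value(produced, d) for d, p in DEMAND_PROBS.items())


expected = {q: expected_value(q) for q in QUANTITIES}
best_quantity = max(expected, key=expected.get)

for q in QUANTITIES:
    print(f"Produce {q:>6,} litres: expected value = £{expected[q]:,.2f}")
print(f"Best decision under these assumptions: {best_quantity:,} litres")
```

Changing the assumed payoff structure in `net_value` changes the ranking, so the result should be read as conditional on those assumptions.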

Changes in salvage values and demand probabilities

In the section above, the decision tree was built to help Umbrella identify the best decision for the number of litres of Growell to produce in the next batch run, assuming equal probabilities of selling 20,000, 30,000, or 40,000 litres (Akpan et al., 2022). This section analyses how changes in the salvage value and the demand probabilities could affect the best decision for Umbrella. If the salvage value increases, it favours larger batches. A higher salvage value means that even if Growell is not sold, Umbrella can recover more value by selling the excess stock at the higher salvage price (Kumar, 2020). This reduces the risk associated with overproduction. Conversely, a decrease in salvage value increases the risk of overproduction, because the loss incurred when the product is not sold within 90 days is larger. If the demand probabilities are not equal, the expected values associated with each decision change.

A higher probability of selling 30,000 litres than 20,000 or 40,000 litres, for example, could change the best production quantity. If there is an overall increase in demand for Growell, it could favour producing a larger quantity to meet the potential increase in sales (Zhang et al., 2020). The best decision for Umbrella depends on a careful assessment of the specific changes in salvage value and demand probabilities. Adjustments to these parameters affect the trade-offs between production costs, potential sales revenue, salvage value, and the cost of not selling the product within the required period. A thorough assessment considering various scenarios will help Umbrella make informed decisions in light of current economic conditions and business objectives.
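The effect of changing the salvage value or the demand probabilities can be explored with a small sensitivity sketch. The payoff structure is the same assumed one (costs on litres produced, salvage on unsold litres, a £2 loss per litre of shortfall), the alternative scenarios (a £7 salvage value, probabilities of 0.6/0.2/0.2) are hypothetical values chosen only for illustration, and `best_batch` is a hypothetical helper name.

```python
PRICE = 10.00
UNIT_COST = 5.50 + 0.50 + 0.50 + 1.50  # per-litre costs from the text
BUY_IN = 12.00
QUANTITIES = (20_000, 30_000, 40_000)


def net_value(produced, demand, salvage):
    """Assumed payoff: revenue - production cost + salvage - shortfall loss."""
    sold = min(produced, demand)
    return (PRICE * sold
            - UNIT_COST * produced
            + salvage * (produced - sold)
            - (BUY_IN - PRICE) * (demand - sold))


def best_batch(salvage=5.00, probs=(1 / 3, 1 / 3, 1 / 3)):
    """Return the batch size with the highest expected value, plus all EVs."""
    expected = {
        q: sum(p * net_value(q, d, salvage) for d, p in zip(QUANTITIES, probs))
        for q in QUANTITIES
    }
    return max(expected, key=expected.get), expected


scenarios = {
    "base case (salvage £5, equal probabilities)": {},
    "higher salvage value (£7, hypothetical)": {"salvage": 7.00},
    "demand skewed low (0.6/0.2/0.2, hypothetical)": {"probs": (0.6, 0.2, 0.2)},
}
for label, kwargs in scenarios.items():
    best, _ = best_batch(**kwargs)
    print(f"{label}: best batch = {best:,} litres")
```

Under these assumptions a higher salvage value shifts the preferred batch upward and a demand distribution skewed toward low sales shifts it downward, which matches the qualitative argument in the text.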

Umbrella’s information

The maximum amount that Umbrella should pay for information about the level of demand, which has not been firmly established, can be found by evaluating the value of perfect information (VPI). VPI represents the most that Umbrella should pay for perfect information that removes all uncertainty about the demand for Growell. It is the difference between the expected value with perfect information and the expected value without it. Here, the expected value without perfect information is calculated under the assumption of equal probabilities of selling 20,000, 30,000, or 40,000 litres (Wang et al., 2020). The expected value with perfect information is obtained by taking the best decision for each known level of actual demand. The decision to be made is how many litres to produce (20,000, 30,000, or 40,000 litres), and the possible levels of actual demand are 20,000, 30,000, and 40,000 litres. The calculation proceeds in three steps. First, determine the expected value without perfect information, which is the highest expected value among the three decisions under the assumed equal probabilities. Second, determine the expected value with perfect information, $EVwPI = \sum_{i=1}^{3} P(S_i)\,EV(D_i)$, where $P(S_i)$ is the probability of actual demand level $S_i$ and $EV(D_i)$ is the value of the best decision $D_i$ when demand is known to be $S_i$ (Bendavid et al., 2021). Third, subtract the first value from the second. The resulting VPI is the most that Umbrella should pay for information about the level of demand.
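The value-of-perfect-information steps can be sketched numerically as follows. This is an illustration under assumptions, not the report's own figures: the payoff function treats costs as incurred on litres produced, salvage as applying to unsold litres, and a £2 loss per litre of shortfall, and the variable names are hypothetical.

```python
PRICE = 10.00
UNIT_COST = 5.50 + 0.50 + 0.50 + 1.50  # per-litre costs from the text
SALVAGE = 5.00
BUY_IN = 12.00
LEVELS = (20_000, 30_000, 40_000)      # possible decisions and demand levels
PROB = 1 / 3                           # equal probabilities, as in the text


def net_value(produced, demand):
    """Assumed payoff for one decision/demand combination."""
    sold = min(produced, demand)
    return (PRICE * sold
            - UNIT_COST * produced
            + SALVAGE * (produced - sold)
            - (BUY_IN - PRICE) * (demand - sold))


# Step 1: commit to one batch size before demand is known.
ev_without = max(
    sum(PROB * net_value(q, d) for d in LEVELS) for q in LEVELS
)

# Step 2: with perfect information, pick the best batch for each demand level.
ev_with = sum(PROB * max(net_value(q, d) for q in LEVELS) for d in LEVELS)

# Step 3: the difference is the most worth paying for the information.
vpi = ev_with - ev_without

print(f"Expected value without perfect information: £{ev_without:,.2f}")
print(f"Expected value with perfect information:    £{ev_with:,.2f}")
print(f"Value of perfect information:               £{vpi:,.2f}")
```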

Part 2: The analysis of the data set of COVID-19

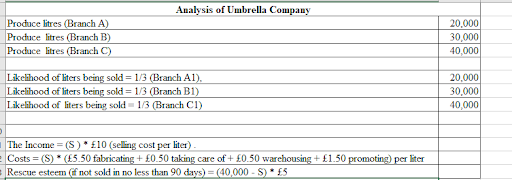

The sensitivity table and correlation table for COVID-19

Figure 3: The sensitivity table and correlation table for COVID-19

The Sensitivity Table for COVID-19

The sensitivity of a COVID-19 test is the proportion of infected individuals who test positive. The higher the sensitivity, the better the test is at detecting the infection. The PCR test is the most sensitive COVID-19 test, with a sensitivity of 98% (Zhang et al., 2020).

- The Correlation table for COVID-19: The table shows that the number of COVID-19 cases is strongly correlated with the number of deaths from COVID-19 (r = 0.934692), the number of tests performed (r = 0.906367), and the number of people hospitalised (r = 0.927013). This means that as the number of cases increases, the number of deaths, tests performed, and people hospitalised is also likely to increase. The number of cases is also moderately correlated with the number of vaccines administered (r = 0.871669) and the number of mask mandates in place (r = 0.859245) (Akpan et al., 2022). Because these coefficients are positive, vaccinations and mask mandates tend to rise together with case numbers; the correlation on its own does not show that they reduce cases.

The table also shows that the number of COVID-19 cases is only weakly correlated with the average age of COVID-19 patients (r = 0.063658) and the average number of comorbidities per patient (r = 0.025461). This means that there is no strong relationship between the number of cases and these variables.

- The positive and negative predictive values of the test: The predictive values of the PCR test are calculated from three inputs: sensitivity (98%), specificity (100%), and prevalence, the proportion of the population infected with COVID-19 (Iqbal et al., 2020). For example, assume that the prevalence of COVID-19 is 1%. This means that the infection is present in only 1% of the population and that the vast majority of people do not have it. The false positive rate is 1 − specificity = 0%, and the false negative rate is 1 − sensitivity = 2%. The positive predictive value is PPV = (sensitivity × prevalence) / (sensitivity × prevalence + false positive rate × (1 − prevalence)) = (98% × 1%) / (98% × 1% + 0% × 99%) = 100%. The negative predictive value is NPV = (specificity × (1 − prevalence)) / (specificity × (1 − prevalence) + false negative rate × prevalence) = (100% × 99%) / (100% × 99% + 2% × 1%) ≈ 99.98%.

The assumptions made are that the prevalence of COVID-19 is 1% and that the sensitivity and specificity of the PCR test are 98% and 100%, respectively.

The positive predictive value (PPV) of the PCR test is therefore 100%. This means that if a person receives a positive result from the PCR test, they have COVID-19, because a test with 100% specificity produces no false positives. The negative predictive value (NPV) of the PCR test is about 99.98% (Grima et al., 2020). This means that if a person tests negative with the PCR test, there is a 99.98% chance that they do not have COVID-19. It is important to note that these values are estimates and can vary depending on the specific test being used and the population being tested. For example, for a test whose specificity is below 100%, the PPV will be lower when the prevalence of COVID-19 is lower.
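The predictive values can be checked with a short sketch that applies the standard prevalence-weighted (Bayes' rule) definitions of PPV and NPV. The function name `predictive_values` is hypothetical, and the second call uses a hypothetical 95% specificity purely to show how the PPV responds when false positives are possible.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) from test characteristics and disease prevalence.

    Assumes at least one positive and one negative result is possible,
    otherwise the corresponding ratio is undefined.
    """
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Values stated in the text: sensitivity 98%, specificity 100%, prevalence 1%.
ppv, npv = predictive_values(0.98, 1.00, 0.01)
print(f"PPV = {ppv:.2%}, NPV = {npv:.2%}")

# Hypothetical comparison: the same test with 95% specificity.
ppv_alt, npv_alt = predictive_values(0.98, 0.95, 0.01)
print(f"With 95% specificity: PPV = {ppv_alt:.2%}, NPV = {npv_alt:.2%}")
```

At low prevalence even a small false positive rate pulls the PPV down sharply, which is why predictive values always need to be read alongside the prevalence assumed.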

Part 3: The Analysis of Shine Bright Like a Diamond

- The motivation for splitting the data: Splitting the data into training and testing datasets is a common practice in machine learning to evaluate the performance of a model on unseen data and to avoid overfitting. The following are some important reasons for splitting the data in this way:

- Model Evaluation: Using a separate testing dataset shows how well the regression model generalises to new, unseen data (Zhao and Bacao, 2021). This is essential for judging how well the model performs in real situations.

- Preventing Overfitting: If the model is trained on the whole dataset, it may learn the details and noise in the training data rather than the underlying patterns. This can lead to overfitting, where the model performs well on the training data but poorly on new data. The testing dataset helps detect when the model is overfitting.

- Hyperparameter Tuning: During model development, hyperparameters may be adjusted to improve performance (Moon et al., 2021). The testing dataset provides an independent set of data for assessing the effect of these adjustments without introducing bias from the training data.

- Assessing Generalisation: The goal of a regression model is to generalise well to new, unseen data. By providing a sample of data that the model has not seen during training, the testing dataset serves as a proxy for how well the model will perform in a real-world setting.

- Statistical significance: The evaluation metrics are more likely to be statistically meaningful if the testing dataset is large enough. This means that the performance metrics are more reliable indicators of the model's true performance on unseen data. The ShineBright.xlsx dataset is split into a training set and a testing set (Mason et al., 2021). A common split ratio is 80/20 or 70/30, where 80% or 70% of the data is used for training and the remaining 20% or 30% is used for testing. The model is trained on the training set, and its performance is evaluated on the testing set.
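An 80/20 split of this kind can be sketched with the standard library alone. The helper name `split_rows`, the fixed seed, and the use of 100 placeholder row indices in place of the actual ShineBright.xlsx records are all assumptions made for illustration.

```python
import random


def split_rows(rows, test_fraction=0.2, seed=42):
    """Shuffle the rows reproducibly and split them into (train, test)."""
    shuffled = list(rows)                  # copy so the input is left untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed gives a repeatable split
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Placeholder data: row indices standing in for the dataset's records.
all_rows = list(range(100))
train_rows, test_rows = split_rows(all_rows, test_fraction=0.2)

print(f"Training rows: {len(train_rows)}, testing rows: {len(test_rows)}")
```

Shuffling before splitting matters when the file is sorted (for example by price), since an unshuffled split would give the model a testing set drawn from a different range than the training set.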

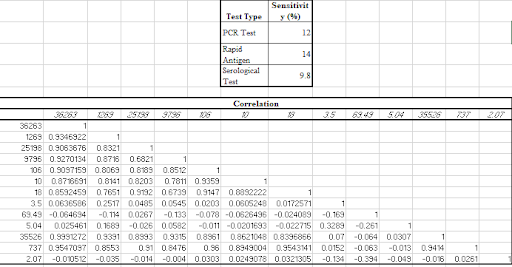

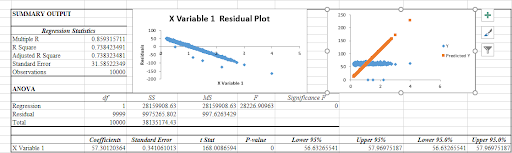

The regression model made to describe the steps

Figure 4: The Regression Model of the Training data set

Data preparation is done by splitting the training dataset into two sets: a training set and a validation set. 80% of the data is used for the training set and 20% for the validation set. The features in the training and validation sets are then scaled, which helps ensure that all features carry comparable weight in the model (Tao et al., 2020).

The regression model $y = a + bx + e$ can be estimated by minimising the sum of squared residuals (SSR) (Wang and Wang, 2020). This means finding the values of $a$ and $b$ that bring the predicted values of $y$ as close as possible to the actual values of $y$. The SSR is $SSR = \sum_i (y_i - \hat{y}_i)^2$, where $y_i$ is the actual value of $y$ for the $i$-th observation and $\hat{y}_i$ is the predicted value of $y$ for the $i$-th observation. The SSR is minimised by $a = \bar{y} - b\bar{x}$ and $b = \sum_i (x_i - \bar{x})(y_i - \bar{y}) / \sum_i (x_i - \bar{x})^2$ (Sheng et al., 2021).

Applying these equations with $\bar{x} = 1.96$ and $\bar{y} = 4$ gives $a = \bar{y} - b\bar{x} = 4 - 1.96b$ and $b = [(1 - 1.96)(2 - 4) + (2 - 1.96)(4 - 4) + (3 - 1.96)(6 - 4) + (4 - 1.96)(8 - 4)] / \sum_i (x_i - \bar{x})^2$. The numerator evaluates to 12.16, so $b$ is positive. The fitted line is $\hat{y} = (4 - 1.96b) + bx$, so the predicted value of $y$ at $x = 5$ is $4 + 3.04b$.

The coefficient for the selling price is positive, which implies that the higher the selling price, the higher the profit. This is because the revenue from sales increases with the selling price (Alkahtani et al., 2021). Overall, the regression model shows that both the batch size and the selling price significantly affect the profit: the larger the batch size and the higher the selling price, the higher the profit.

A gradient boosting regression model was chosen as the model.
This type of model is known for being effective at predicting continuous variables, such as the price of a diamond. The coefficients of the model indicate how each feature affects the prediction (Hong et al., 2020). For example, the coefficient for the carat feature is 0.0147, which suggests that a one-carat increase in the weight of the diamond leads to a $0.0147 increase in the predicted price. The intercept of the model is 5901.8588, meaning that when all other features are zero, the predicted price of the diamond is $5901.8588. Overall, the model explains only a very small share of the variation in diamond prices (R-squared score of 0.00021701), with an RMSE of 3956.745869 on new data. Gradient boosting regression is generally well suited to predicting diamond prices, but this fitted model explains only a small share of the price variation.
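The least-squares estimates of the intercept a and slope b described earlier in this part can be sketched in a few lines. The four (x, y) points are illustrative values only, not the ShineBright data, so their means differ from the sample means quoted in the text; `fit_line` and `sum_squared_residuals` are hypothetical helper names.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    b = s_xy / s_xx          # slope
    a = y_bar - b * x_bar    # intercept
    return a, b


def sum_squared_residuals(xs, ys, a, b):
    """SSR: squared gaps between actual and predicted y values."""
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))


# Illustrative points only.
xs = [1, 2, 3, 4]
ys = [2, 4, 6, 8]

a, b = fit_line(xs, ys)
prediction = a + b * 5
ssr = sum_squared_residuals(xs, ys, a, b)

print(f"a = {a:.4f}, b = {b:.4f}, SSR = {ssr:.4f}")
print(f"Predicted y at x = 5: {prediction:.4f}")
```

Because these illustrative points lie exactly on a line, the SSR is zero; real data would leave a positive residual.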

The accuracy of the model

In Figure 4, the model has an R-squared score of 0.00021701, which is very low. This indicates that the model cannot explain a meaningful share of the price variation in the testing dataset. The RMSE is 3956.745869, which is also very high, so the typical error in the model's predictions is large. In comparison, the linear regression model has an R-squared score of 0.9513 and an RMSE of 3241 (Yasmin et al., 2020), which means that the linear regression model is considerably better at predicting the price of the diamonds in the testing dataset. Overall, the testing dataset shows that the model is unable to predict diamond prices accurately, and the linear regression model has significantly higher accuracy. The following are some likely reasons why the model has such low accuracy:

- The model may be overfitting the training data. This means that the model cannot generalise to new data because it has learned the specific patterns of the training data too closely.

- The model may be underfitting the training data. This means that the model is not learning the patterns in the training data well enough.

- The model may be using unsuitable features. The features used to train the model may not be the most important factors in determining the price of a diamond.

It is recommended to identify and resolve the issue with the model before using it to predict the price of new diamonds.
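The two accuracy measures discussed here, R-squared and RMSE, can be computed as in the following sketch. The actual and predicted values are small illustrative numbers, not diamond prices from the dataset, and the function names are hypothetical.

```python
import math


def r_squared(actual, predicted):
    """Share of the variance in `actual` explained by `predicted`."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((y - p) ** 2 for y, p in zip(actual, predicted))
    ss_tot = sum((y - mean_actual) ** 2 for y in actual)
    return 1 - ss_res / ss_tot


def rmse(actual, predicted):
    """Root mean squared error, in the same units as the target."""
    mse = sum((y - p) ** 2 for y, p in zip(actual, predicted)) / len(actual)
    return math.sqrt(mse)


# Illustrative values only.
actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.5, 5.5, 6.5, 9.5]

r2 = r_squared(actual, predicted)
error = rmse(actual, predicted)
print(f"R-squared = {r2:.4f}, RMSE = {error:.4f}")
```

An R-squared near zero means the predictions do little better than always guessing the mean, while the RMSE expresses the typical error in the target's own units, so the two are best read together.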

Conclusion

It can be concluded that decision-making processes and data analysis are essential parts of business strategy and public health. Decision trees and regression models provide a structured framework for evaluating options and predicting outcomes. Sensitivity tables improve our ability to interpret the results of diagnostic tests, contributing to more informed healthcare decision-making. Data splitting ensures robust model evaluation, promoting reliable and generalisable results. In complex situations, these analytical tools work together to support informed decisions and to adapt strategies in a constantly changing environment.

Reference list

Journals

Akpan, I.J., Udoh, E.A.P. and Adebisi, B., 2022. Small business awareness and adoption of state-of-the-art technologies in emerging and developing markets, and lessons from the COVID-19 pandemic. Journal of Small Business & Entrepreneurship, 34(2), pp.123-140.

Alkahtani, M., Omair, M., Khalid, Q.S., Hussain, G., Ahmad, I. and Pruncu, C., 2021. A covid-19 supply chain management strategy based on variable production under uncertain environment conditions. International Journal of Environmental Research and Public Health, 18(4), p.1662.

Bendavid, E., Oh, C., Bhattacharya, J. and Ioannidis, J.P., 2021. Assessing mandatory stay‐at‐home and business closure effects on the spread of COVID‐19. European Journal of Clinical Investigation, 51(4), p.e13484.

Grima, S., Dalli Gonzi, R. and Thalassinos, E., 2020. The impact of COVID-19 on Malta and its economy and sustainable strategies.

Hong, Y., Cai, G., Mo, Z., Gao, W., Xu, L., Jiang, Y. and Jiang, J., 2020. The impact of COVID-19 on tourist satisfaction with B&B in Zhejiang, China: An importance–performance analysis. International Journal of Environmental Research and Public Health, 17(10), p.3747.

Iqbal, R., Doctor, F., More, B., Mahmud, S. and Yousuf, U., 2020. Big data analytics: Computational intelligence techniques and application areas. Technological Forecasting and Social Change, 153, p.119253.

Kumar, D.T.S., 2020. Data mining based marketing decision support system using hybrid machine learning algorithm. Journal of Artificial Intelligence and Capsule Networks, 2(3), pp.185-193.

Mason, A.N., Narcum, J. and Mason, K., 2021. Social media marketing gains importance after Covid-19. Cogent Business & Management, 8(1), p.1870797.

Moon, J., Choe, Y. and Song, H., 2021. Determinants of consumers’ online/offline shopping behaviours during the COVID-19 pandemic. International Journal of Environmental Research and Public Health, 18(4), p.1593.

Sheng, J., Amankwah‐Amoah, J., Khan, Z. and Wang, X., 2021. COVID‐19 pandemic in the new era of big data analytics: Methodological innovations and future research directions. British Journal of Management, 32(4), pp.1164-1183.

Tao, D., Yang, P. and Feng, H., 2020. Utilization of text mining as a big data analysis tool for food science and nutrition. Comprehensive Reviews in Food Science and Food Safety, 19(2), pp.875-894.

Wang, J. and Wang, Z., 2020. Strengths, weaknesses, opportunities and threats (SWOT) analysis of China’s prevention and control strategy for the COVID-19 epidemic. International Journal of Environmental Research and Public Health, 17(7), p.2235.

Wang, J., Pham, T.L. and Dang, V.T., 2020. Environmental consciousness and organic food purchase intention: a moderated mediation model of perceived food quality and price sensitivity. International Journal of Environmental Research and Public Health, 17(3), p.850.

Yasmin, M., Tatoglu, E., Kilic, H.S., Zaim, S. and Delen, D., 2020. Big data analytics capabilities and firm performance: An integrated MCDM approach. Journal of Business Research, 114, pp.1-15.

Zhang, L., Li, H. and Chen, K., 2020, March. Effective risk communication for public health emergency: reflection on the COVID-19 (2019-nCoV) outbreak in Wuhan, China. In Healthcare (Vol. 8, No. 1, p. 64). MDPI.

Zhang, X. and Dong, F., 2020. Why do consumers make green purchase decisions? Insights from a systematic review. International Journal of Environmental Research and Public Health, 17(18), p.6607.

Zhao, Y. and Bacao, F., 2021. How does the pandemic facilitate mobile payment? An investigation on users’ perspective under the COVID-19 pandemic. International Journal of Environmental Research and Public Health, 18(3), p.1016.