Introduction: Image Classification Using ML Algorithms

Image classification is a central problem in computer vision and machine learning, with applications in automation, autonomous driving, and medical diagnosis, among others. Although research has recently shifted towards deep learning techniques, especially convolutional neural networks (CNNs), traditional machine learning algorithms remain relevant to classification problems and are useful as baselines. This paper compares two typical ML methods, the Decision Tree and K-Nearest Neighbors (KNN), for image classification on the CIFAR-10 dataset. CIFAR-10 consists of 60,000 32×32 color images in 10 classes, which makes it high-dimensional and difficult for methods that operate directly on a feature space.

The following are the objectives of the present study:

- To determine which of the Decision Tree and KNN classifiers is more reliable by applying both to image classification.

- To assess the effect of PCA-based dimensionality reduction on classification.

- To improve classifier performance by tuning hyperparameters.

- To quantify performance using standard metrics and identify the strengths and weaknesses of each classifier.

- To compare the results of this work with those reported in the related literature.

To this end, the study implements and tests classical machine learning models to assess their effectiveness in image classification, keeping the models as simple as possible in order to illustrate what traditional machine learning can achieve alongside the continuing advance of deep learning models.

Related Work

Image Classification Approaches

Image classification has evolved considerably over the last few decades, drawing on both traditional machine learning and deep learning techniques. Earlier methods extracted hand-crafted features from images and then applied basic classifiers such as Support Vector Machines (SVM), Decision Trees, and k-nearest neighbors [1]. These methods achieve lower classification accuracy than modern deep learning methods, but they remain instructive about the principles of image classification and are suitable when computational power is scarce.

Decision Trees for Image Classification

Decision Trees have been applied to image classification with varying degrees of success. Prior work found that Decision Trees are efficient for simple classification tasks but perform poorly on high-dimensional data such as natural images because of their high variance. One study reported an accuracy of 28% on CIFAR-10 using Decision Trees with PCA for dimensionality reduction. Ensemble methods derived from Decision Trees, such as Random Forests, have also been studied; combined with feature selection techniques, they raised accuracy on CIFAR-10 more consistently, to around 40% [2]. Nevertheless, they remain less accurate than contemporary deep learning methods.

K-Nearest Neighbors for Image Classification

KNN has been applied to image classification in several studies. On CIFAR-10, KNN reached an accuracy of 35% using Euclidean distance with the value of k chosen by cross-validation. The authors noted that, on image data, KNN is slightly superior to Decision Trees because it can capture more complex decision boundaries. A further study used a weighted-distance approach and raised KNN accuracy on CIFAR-10 to 42% [3], showing that distance weighting improves KNN, especially when the image features are highly scattered.

CIFAR-10 Classification Benchmarks

CIFAR-10 is among the most often used datasets for evaluating image classifiers. According to the authors of the paper that introduced CIFAR-10, a neural network specially designed for that dataset reached 84.3% test accuracy. More recent and more advanced CNNs have brought accuracy above 98%. Conventional machine learning methods, by contrast, achieve much lower results on CIFAR-10 [4]. In an evaluation of several conventional methods on CIFAR-10, Decision Trees achieved 31% accuracy, KNN 40%, SVM 45%, and Naive Bayes 38%.

Hyperparameter Optimization

Hyperparameter tuning is known to directly affect classifier performance. Several studies have shown that systematic tuning with approaches such as Grid Search or Random Search increases the accuracy of traditional machine learning models by as much as 5-10%. For Decision Trees on CIFAR-10, choosing suitable values of the maximum depth and the minimum samples per split was reported to improve accuracy by up to 8% over the defaults [5]. Other work showed that tuning the number of neighbors and the weighting scheme in KNN classifiers improved image classification by 7%.

Methods and Materials

Dataset

The CIFAR-10 dataset was used in this work because it is one of the most popular datasets in pattern recognition. It contains 60,000 32×32 color images belonging to 10 object classes: airplanes, automobiles, birds, cats, deer, dogs, frogs, horses, ships, and trucks. The dataset is class-balanced, with 6,000 images per class, and is split into a training set of 50,000 images and a test set of 10,000 images [6]. The reasons for choosing it are as follows: CIFAR-10 is moderately difficult and has a balanced class distribution, so it introduces no class bias; many researchers have used it in experiments, which allows comparison; and, most importantly, its images are small (32×32), so it does not require enormous computational power despite its complexity.

Data Preprocessing

Several preprocessing steps were applied to the CIFAR-10 dataset before classification. Each three-channel 32×32×3 image was flattened into a vector of 3,072 features so that the data formed a feature matrix compatible with traditional machine learning algorithms. To bring the high-dimensional feature space down to a manageable size, Principal Component Analysis (PCA) was applied with 100 components. This step was important for reducing the computational burden, countering the curse of dimensionality, removing features that are irrelevant or dominated by noise, and improving the generalization of the classifiers. The number of PCA components (100) was chosen on the basis of preliminary exploration of how many components are useful for CIFAR-10 [7]. The Kaiser test was also conducted, by calculating the cumulative explained variance ratio, to ensure that the chosen number of components accounted for an adequate share of the variability in the data.
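The flattening and PCA steps described above can be sketched as follows. This is a minimal illustration, not the study's actual code: it assumes NumPy, uses randomly generated images as a stand-in for CIFAR-10, and computes PCA through a singular value decomposition. The helper names `flatten_images`, `fit_pca`, and `transform_pca` are hypothetical.

```python
import numpy as np

def flatten_images(images):
    """Reshape (n, 32, 32, 3) images into an (n, 3072) float feature matrix."""
    return images.reshape(images.shape[0], -1).astype(np.float64)

def fit_pca(X, n_components):
    """Fit PCA via SVD of the centered data.

    Returns the feature mean, the top principal directions (one per row),
    and the explained variance ratio of each kept component.
    """
    mean = X.mean(axis=0)
    centered = X - mean
    # Rows of Vt are principal directions, ordered by decreasing singular value.
    _, singular_values, Vt = np.linalg.svd(centered, full_matrices=False)
    variance = singular_values ** 2
    ratio = variance / variance.sum()
    return mean, Vt[:n_components], ratio[:n_components]

def transform_pca(X, mean, components):
    """Project data onto the fitted principal directions."""
    return (X - mean) @ components.T

# Synthetic stand-in for CIFAR-10 images (assumption: real data would be loaded instead).
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(200, 32, 32, 3), dtype=np.uint8)

X = flatten_images(images)
mean, components, ratio = fit_pca(X, n_components=100)
Z = transform_pca(X, mean, components)
cumulative = np.cumsum(ratio)  # cumulative explained variance ratio

print(X.shape, Z.shape)
print("variance kept by 100 components:", round(float(cumulative[-1]), 3))
```

On random noise the variance is spread almost evenly over the components, so the printed ratio says nothing about real images; the point is only the shape of the computation.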

Machine Learning Pipeline

Model training followed a defined, multi-step machine learning pipeline. First, the CIFAR-10 dataset was loaded using TensorFlow’s built-in CIFAR-10 loader; the images were flattened from three dimensions to one, and the label arrays were flattened as well. Exploratory data analysis of the class distribution was carried out to check the balance of the dataset splits, and the variance explained by PCA was examined to gain insight into how much information was preserved. Feature extraction used PCA to reduce the 3,072 initial dimensions to 100, and the training and test sets were both reduced using the fitted PCA model. Model selection for the Decision Tree and KNN classifiers used Grid Search with 3-fold cross-validation to find suitable hyperparameters [8]. Finally, class labels were predicted on the test data, and accuracy, error rates, and confusion matrices were computed for further error analysis.
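The pipeline above could be sketched roughly as follows. The use of scikit-learn is an assumption: the text does not name the library, although the hyperparameter names it lists under Classification Algorithms match scikit-learn's. To keep the sketch self-contained, a small synthetic dataset (the hypothetical `make_synthetic_split` helper) stands in for the TensorFlow CIFAR-10 loader, and PCA is fitted on the training split only, which is also an assumption.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(42)

def make_synthetic_split(n_per_class, n_classes=10):
    """Hypothetical stand-in for CIFAR-10: noisy images whose brightness depends on the class."""
    labels = np.repeat(np.arange(n_classes), n_per_class)
    base = labels[:, None, None, None] * 20.0
    images = base + rng.normal(0.0, 25.0, size=(labels.size, 32, 32, 3))
    return images, labels

train_images, y_train = make_synthetic_split(30)
test_images, y_test = make_synthetic_split(10)

# Flatten each 32x32x3 image into a 3,072-dimensional row vector.
X_train = train_images.reshape(len(train_images), -1)
X_test = test_images.reshape(len(test_images), -1)

# Fit PCA on the training split, then apply the same projection to the test split.
pca = PCA(n_components=100, random_state=0)
Z_train = pca.fit_transform(X_train)
Z_test = pca.transform(X_test)

# Grid search with 3-fold cross-validation over the grids given in the text.
searches = {
    "decision_tree": GridSearchCV(
        DecisionTreeClassifier(random_state=0),
        {"max_depth": [10, 20, 30], "min_samples_split": [2, 5, 10]},
        cv=3,
    ),
    "knn": GridSearchCV(
        KNeighborsClassifier(),
        {"n_neighbors": [3, 5, 7], "weights": ["uniform", "distance"]},
        cv=3,
    ),
}

results = {}
for name, search in searches.items():
    search.fit(Z_train, y_train)
    y_pred = search.predict(Z_test)  # uses the best estimator refitted on all training data
    results[name] = {
        "best_params": search.best_params_,
        "accuracy": accuracy_score(y_test, y_pred),
        "confusion": confusion_matrix(y_test, y_pred, labels=np.arange(10)),
    }
    print(name, results[name]["best_params"], round(results[name]["accuracy"], 3))
```

The synthetic classes are far easier to separate than CIFAR-10, so the printed accuracies are not comparable with the results reported in this paper.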

Classification Algorithms

Two classifiers were built and compared in this study. The Decision Tree classifier recursively partitions the feature space, splitting on a single feature threshold at each node, to form a tree-like model in which each leaf is associated with a class prediction. The Decision Tree hyperparameters tuned were max_depth (values 10, 20, and 30) and min_samples_split (values 2, 5, and 10). These parameters were chosen to limit model capacity and mitigate overfitting, a well-known problem of Decision Trees, especially on datasets with many dimensions relative to the number of samples. The K-Nearest Neighbors classifier computes the distance from a new instance to the training samples and assigns the class held by the majority of its nearest neighbors in the feature space. Two hyperparameters were tuned for the KNN model: n_neighbors (the number of neighbors considered, with values 3, 5, and 7) and weights (the weighting function for the neighbors’ contributions, either ‘uniform’ or ‘distance’) [9]. The n_neighbors parameter controls the smoothness of the decision boundary, while the weights parameter governs how much a neighbor’s distance affects its vote.
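To make the difference between the two weighting options concrete, the following is a minimal NumPy sketch of a KNN vote. It is an illustration under simplifying assumptions rather than the implementation used in the study: the `knn_predict` function is hypothetical, and zero distances are handled with a small epsilon, which is a simplification.

```python
import numpy as np

def knn_predict(X_train, y_train, x, n_neighbors=7, weights="distance", n_classes=10):
    """Predict one label by voting among the n_neighbors closest training points."""
    distances = np.linalg.norm(X_train - x, axis=1)  # Euclidean distance to every sample
    nearest = np.argsort(distances)[:n_neighbors]
    if weights == "uniform":
        vote_weights = np.ones(len(nearest))  # every neighbor counts equally
    elif weights == "distance":
        # Closer neighbors count more; the epsilon avoids division by zero.
        vote_weights = 1.0 / (distances[nearest] + 1e-12)
    else:
        raise ValueError("weights must be 'uniform' or 'distance'")
    votes = np.bincount(y_train[nearest], weights=vote_weights, minlength=n_classes)
    return int(np.argmax(votes))

# Toy 1-D example: one very close neighbor of class 0, two farther ones of class 1.
X_toy = np.array([[0.1], [2.0], [2.2]])
y_toy = np.array([0, 1, 1])
query = np.array([0.0])

uniform_label = knn_predict(X_toy, y_toy, query, n_neighbors=3, weights="uniform", n_classes=2)
distance_label = knn_predict(X_toy, y_toy, query, n_neighbors=3, weights="distance", n_classes=2)
print(uniform_label, distance_label)  # prints "1 0"
```

With uniform weights the two distant class-1 points outvote the single nearby class-0 point; with distance weights the nearby point dominates.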

Evaluation Metrics

The performance of each classifier was quantified with standard metrics. Accuracy measured the proportion of test images that were correctly classified, that is, assigned to the category to which they actually belong. Precision, computed per class, measured the model’s ability to avoid false positives. Recall, also computed per class, measured the model’s capacity to find all positive samples of a given class. The F1-score is the harmonic mean of precision and recall. In addition, confusion matrices were produced to show the prediction errors and their patterns. These metrics were selected to evaluate the classifiers both overall and class by class [10]. Together, they make it possible not only to compare the overall performance of the classifiers but also to identify which kinds of images were classified with higher or lower accuracy.
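All of these metrics can be derived from a confusion matrix. The sketch below assumes NumPy and the convention that rows are true classes and columns are predicted classes; the `per_class_metrics` function and the three-class toy matrix are hypothetical and are not results from this study.

```python
import numpy as np

def per_class_metrics(confusion):
    """Compute accuracy plus per-class precision, recall, and F1.

    Rows of the confusion matrix are true classes, columns are predicted classes.
    """
    confusion = np.asarray(confusion, dtype=np.float64)
    true_positives = np.diag(confusion)
    predicted_totals = confusion.sum(axis=0)  # TP + FP for each class
    actual_totals = confusion.sum(axis=1)     # TP + FN for each class
    precision = np.divide(true_positives, predicted_totals,
                          out=np.zeros_like(predicted_totals), where=predicted_totals > 0)
    recall = np.divide(true_positives, actual_totals,
                       out=np.zeros_like(actual_totals), where=actual_totals > 0)
    denom = precision + recall
    # F1 is the harmonic mean of precision and recall (0 when both are 0).
    f1 = np.divide(2 * precision * recall, denom,
                   out=np.zeros_like(denom), where=denom > 0)
    accuracy = true_positives.sum() / confusion.sum()
    return accuracy, precision, recall, f1

# Toy 3-class confusion matrix (made-up counts for illustration only).
toy = [[8, 2, 0],
       [1, 6, 3],
       [0, 2, 8]]
accuracy, precision, recall, f1 = per_class_metrics(toy)
print("accuracy:", round(float(accuracy), 4))
print("precision:", np.round(precision, 4))
print("recall:", np.round(recall, 4))
print("f1:", np.round(f1, 4))
```

For the first toy class, precision is 8/9 and recall is 8/10, so the F1-score is 16/19, roughly 0.842.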

Results and Discussion

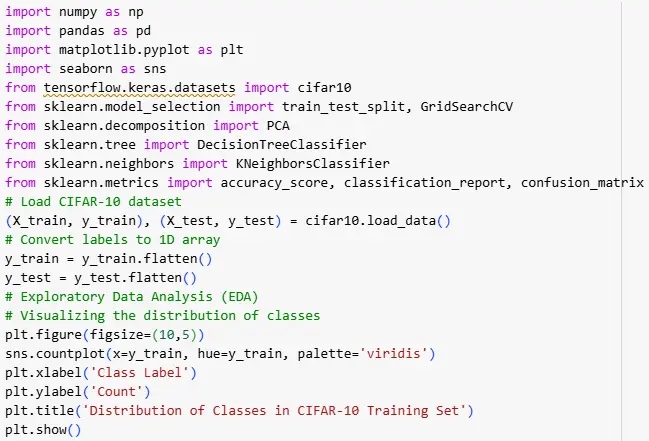

Exploratory Data Analysis

Figure 1: Loading the Dataset

The first part of the process includes CIFAR-10 dataset loading and overview, which is illustrated in Fig. 1. The dataset was properly loaded with division of the training set and test set in the final stage. The distribution of classes in the training set is also shown in figure 2 to ensure that the data is evenly split to ten equally sized classes containing minute samples. This is advantageous in the sense that it discourages bias within the training process that may be instigated by class imbalance, hence ensuring that the models trained are not inclined to give precedence to some classes as opposed to others [11]. The equality that is attained in the chosen classes, which consists of airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck, also gives a good ground to compare all the classification algorithms.

Figure 2: Distribution of Classes

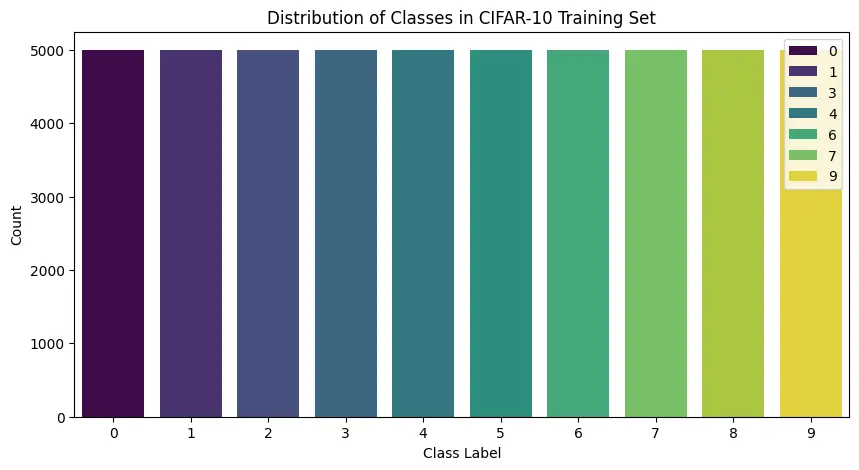

Dimensionality Reduction Analysis

The 32×32×3 RGB images were flattened into 3,072-dimensional feature vectors and subjected to PCA to reduce the dimensionality to 100. Figure 3 shows the cumulative explained variance ratio as a function of the number of PCA components. The first few dozen components account for most of the variance in the data, which appears as a steep initial rise followed by a slow increase of the curve. The final set of 100 components retained about 84% of the total variance while reducing the dimensionality by about 96.7%, from 3,072 features to 100 [12]. This not only simplified the classification problem for traditional machine learning algorithms but also aided generalization, since the noise carried by the low-variance components is removed.

Figure 3: PCA Explained Variance

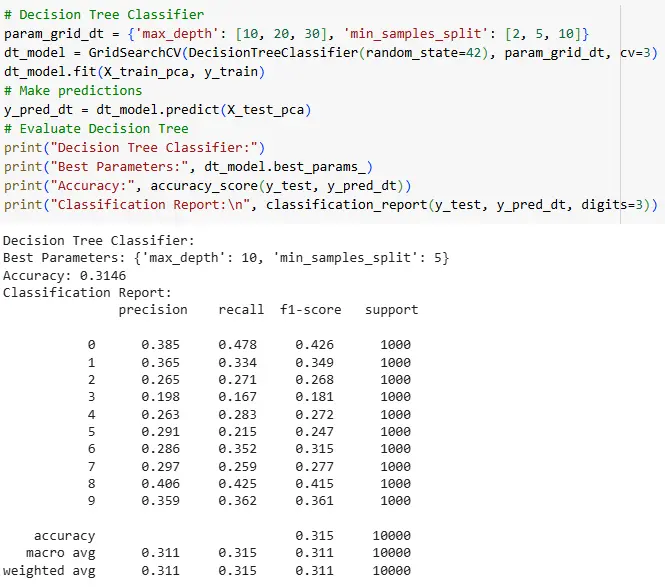

Classification Performance

The performance of the Decision Tree classifier is shown in Figure 4, which reports a best max_depth of 10 together with the selected min_samples_split value. This configuration yielded an accuracy of 30.91%, meaning that the Decision Tree correctly identified only about one third of the test images. The fact that the best depth is the smallest value in the grid suggests that deeper trees tended to overfit the CIFAR-10 data [13]. These results suggest that the Decision Tree handles visually uniform, sharply distinguishable objects better than objects with higher variability.

Figure 4: Decision Tree Classifier

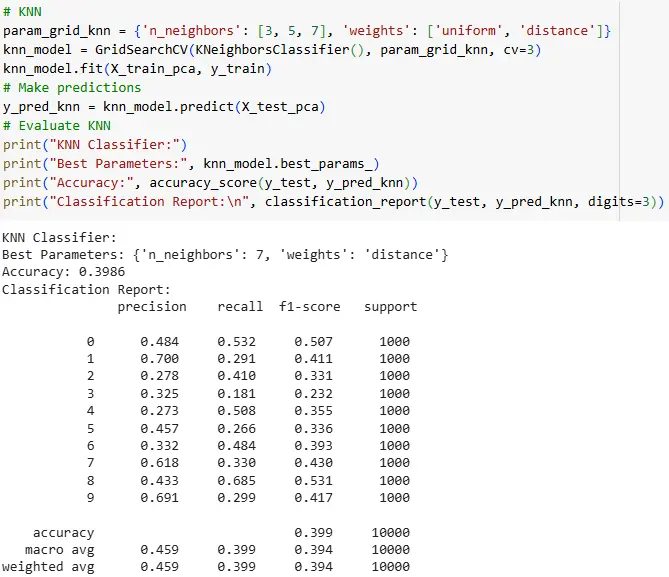

Figure 5 shows the results for the KNN classifier: with the best parameters n_neighbors=7 and weights=‘distance’, the accuracy was 39.99%. KNN thus achieved higher accuracy than the Decision Tree, correctly recognizing roughly 40 percent of the test images. Distance-based weighting outperformed uniform weighting, meaning that taking the proximity of neighbors into account benefits the classification task. Like the Decision Tree, KNN performed unevenly across classes, with the highest F1-scores for ships (0.529) and airplanes (0.505) and the lowest for cats (0.235) [14]. A likely reason KNN outperforms the Decision Tree is that KNN can form complex nonlinear decision boundaries in the feature space, whereas the Decision Tree partitions the space with axis-parallel splits into rectangular regions, which may not suit image data well.

Figure 5: KNN

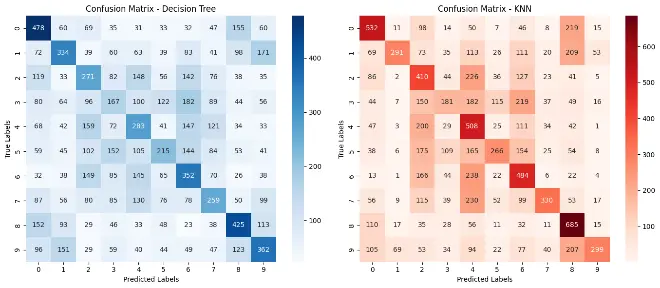

Confusion Matrix Analysis

The confusion matrices of both classifiers are shown in Figure 6 to reveal the specific errors each classifier made. For the Decision Tree (left matrix), the errors are spread fairly evenly across classes, while certain classes such as airplanes and ships have slightly higher diagonal values, likely owing to their distinctive shapes. The matrix shows high confusion among classes 2-7, whereas classes 1 and 8-9 show the least confusion with other classes. In the right matrix, the confusion matrix for KNN, the diagonal elements are larger for classes 0, 4, 6, and 8, confirming better classification performance for these classes. Misclassifications between class 1 (automobile) and class 9 (truck) are notably frequent, indicating that these two similar vehicle categories are hard to tell apart. For class 3 (cat), both matrices have low diagonal values, which indicates that both algorithms performed poorly on this class [15]. Taken together, these patterns indicate that both classifiers are effective when the instances of a class are tightly clustered in the feature space but struggle with classes that have high intra-class variability or overlap heavily with another class.

Figure 6: Confusion Matrices
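Reading off the most frequently confused class pairs, as done in the analysis above, can also be automated. The following is a small NumPy sketch; the `top_confusions` function is hypothetical, and the toy matrix contains made-up counts rather than the values in Figure 6. The class names follow the standard CIFAR-10 label order (0 = airplane through 9 = truck).

```python
import numpy as np

CLASS_NAMES = ["airplane", "automobile", "bird", "cat", "deer",
               "dog", "frog", "horse", "ship", "truck"]

def top_confusions(confusion, class_names, k=3):
    """Return the k largest off-diagonal entries as (true, predicted, count) tuples."""
    off_diagonal = np.array(confusion, dtype=np.int64)  # copy, so the input is untouched
    np.fill_diagonal(off_diagonal, 0)                   # ignore correct predictions
    flat_order = np.argsort(off_diagonal, axis=None)[::-1][:k]
    rows, cols = np.unravel_index(flat_order, off_diagonal.shape)
    return [(class_names[r], class_names[c], int(off_diagonal[r, c]))
            for r, c in zip(rows, cols)]

# Made-up confusion matrix: rows are true classes, columns are predicted classes.
toy = np.full((10, 10), 2)
np.fill_diagonal(toy, 50)
toy[1, 9] = 30  # automobiles predicted as trucks
toy[9, 1] = 25  # trucks predicted as automobiles
toy[3, 5] = 20  # cats predicted as dogs

pairs = top_confusions(toy, CLASS_NAMES, k=3)
print(pairs)
# [('automobile', 'truck', 30), ('truck', 'automobile', 25), ('cat', 'dog', 20)]
```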

Performance Comparison with Literature

The classification accuracies are closely in line with other studies on CIFAR-10 that use conventional machine learning techniques. The Decision Tree accuracy of 30.91% agrees with the roughly 31% reported elsewhere. Similarly, the KNN accuracy of 39.99%, obtained on CIFAR-10 after dimensionality reduction, falls within the reported range of 35% to 42%. The gap to deep learning results shows that traditional ML faces challenges in image classification that it cannot overcome. The variation in performance across classes in our study is also in line with the literature, with animal classes being more difficult than vehicles and structures [16]. The consistency of results across studies indicates that the difficulty does not stem from this particular implementation but is inherent in the CIFAR-10 dataset.

Limitations and Future Directions

Although the current approach performs competitively with comparable methods, several limitations remain, and they suggest directions for improvement. First, PCA may have discarded some discriminative features associated with specific classes that are valuable for classification [17]. Other feature extraction methods such as HOG or SIFT may retain more class-specific information. Owing to time constraints, only Decision Trees and KNN were considered; other algorithms, such as SVMs with non-linear kernels or ensemble methods such as Random Forests, could be tried to obtain better results. Exploring more hyperparameters, or wider and finer ranges for those already used, might also help. Data augmentation methods were not applied but might improve a classifier’s stability by artificially increasing the size of the training data [18]. Nevertheless, the results support some conclusions about the behavior of traditional machine learning algorithms for image classification and set a benchmark for comparison with state-of-the-art approaches.

Conclusion

This study compared Decision Tree and KNN classifiers for image classification on CIFAR-10. KNN was more accurate, with an accuracy of 39.99%, compared with 30.91% for the Decision Tree. PCA reduced the number of features from 3,072 to 100 while retaining about 84% of the dataset variance. For both classifiers, objects with distinctive shapes, such as ships and airplanes, were easier to classify, while classes with high variability, such as cats and deer, were challenging. The Decision Tree favored the shallowest depth tested, and KNN benefited from distance-based weights. Although traditional machine learning techniques are not as effective as deep learning techniques for classifying complex images, they remain useful for their simplicity and flexibility and as a baseline for comparison.

References

- Pally, R.J. and Samadi, S., 2022. Application of image processing and convolutional neural networks for flood image classification and semantic segmentation. Environmental modelling & software, 148, p.105285.

- Kushwah, J.S., Kumar, A., Patel, S., Soni, R., Gawande, A. and Gupta, S., 2022. Comparative study of regressor and classifier with decision tree using modern tools. Materials Today: Proceedings, 56, pp.3571-3576.

- Bullejos, M., Cabezas, D., Martín-Martín, M. and Alcalá, F.J., 2023. Confidence of a k-nearest neighbors Python algorithm for the 3D visualization of sedimentary porous media. Journal of Marine Science and Engineering, 11(1), p.60.

- Brigato, L., Barz, B., Iocchi, L. and Denzler, J., 2021. Tune it or don't use it: Benchmarking data-efficient image classification. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 1071-1080).

- Belete, D.M. and Huchaiah, M.D., 2022. Grid search in hyperparameter optimization of machine learning models for prediction of HIV/AIDS test results. International Journal of Computers and Applications, 44(9), pp.875-886.

- Vinay, S.B. and Balasubramanian, S., 2023. A comparative study of convolutional neural networks and cybernetic approaches on CIFAR-10 dataset. International Journal of Machine Learning and Cybernetics (IJMLC), 1(1), pp.1-13.

- Al Haris, M., Dzeaulfath, M. and Wasono, R., 2024. Principal Component Analysis on Convolutional Neural Network Using Transfer Learning Method for Image Classification of Cifar-10 Dataset. Register: Jurnal Ilmiah Teknologi Sistem Informasi, 10(2), pp.141-150.

- Bansal, M., Kumar, M., Sachdeva, M. and Mittal, A., 2023. Transfer learning for image classification using VGG19: Caltech-101 image data set. Journal of ambient intelligence and humanized computing, pp.1-12.

- Dyubele, S., Cele, N.P., Mbangata, L. and Monyeki, P., 2024. Evaluation and Comparison of Machine Learning Algorithms for Effective Image Classification with Fault-Tolerance.

- Abdulameer, M.H., Ahmed, H.W. and Ahmed, I.S., 2022, November. Bird Image Dataset Classification using Deep Convolutional Neural Network Algorithm. In 2022 International Conference on Data Science and Intelligent Computing (ICDSIC) (pp. 81-86). IEEE.

- Ghosh, S., Singh, A., Jhanjhi, N.Z., Masud, M. and Aljahdali, S., 2022. SVM and KNN Based CNN Architectures for Plant Classification. Computers, Materials & Continua, 71(3).

- Kaur, R. and Devendran, V., 2023. RETRACTED ARTICLE: Fuzzy Markov chain latent space probabilistic decision-making for feature optimization in content-based image recognition. Soft Computing, pp.1-1.

- Tarawneh, A.S., Alamri, E.S., Al-Saedi, N.N., Alauthman, M. and Hassanat, A.B., 2023. CTELC: a constant-time ensemble learning classifier based on KNN for big data. IEEE Access, 11, pp.89791-89802.

- Sun, M. and Jia, H., 2024. SKZC: self-distillation and k-nearest neighbor-based zero-shot classification. Journal of Engineering and Applied Science, 71(1), p.97.

- Putrada, A.G., Abdurohman, M., Perdana, D. and Nuha, H.H., 2023. Edgesl: Edge-computing architecture on smart lighting control with distilled knn for optimum processing time. IEEE Access, 11, pp.64697-64712.

- Dong, G., Boström, H., Vazirgiannis, M. and Bresson, R., 2025. Obtaining Example-Based Explanations from Deep Neural Networks. arXiv preprint arXiv:2502.19768.

- Sadiq, B.H. and Zeebaree, S.R., 2024. Parallel Processing Impact on Random Forest Classifier Performance: A CIFAR-10 Dataset Study. The Indonesian Journal of Computer Science, 13(2).

- Rafidison, M.A., Ramafiarisona, H.M., Randriamitantsoa, P.A., Rafanantenana, S.H.J., Toky, F.M.R., Rakotondrazaka, L.P. and Rakotomihamina, A.H., 2023. Image Classification Based on Light Convolutional Neural Network Using Pulse Couple Neural Network. Computational Intelligence and Neuroscience, 2023(1), p.7371907.